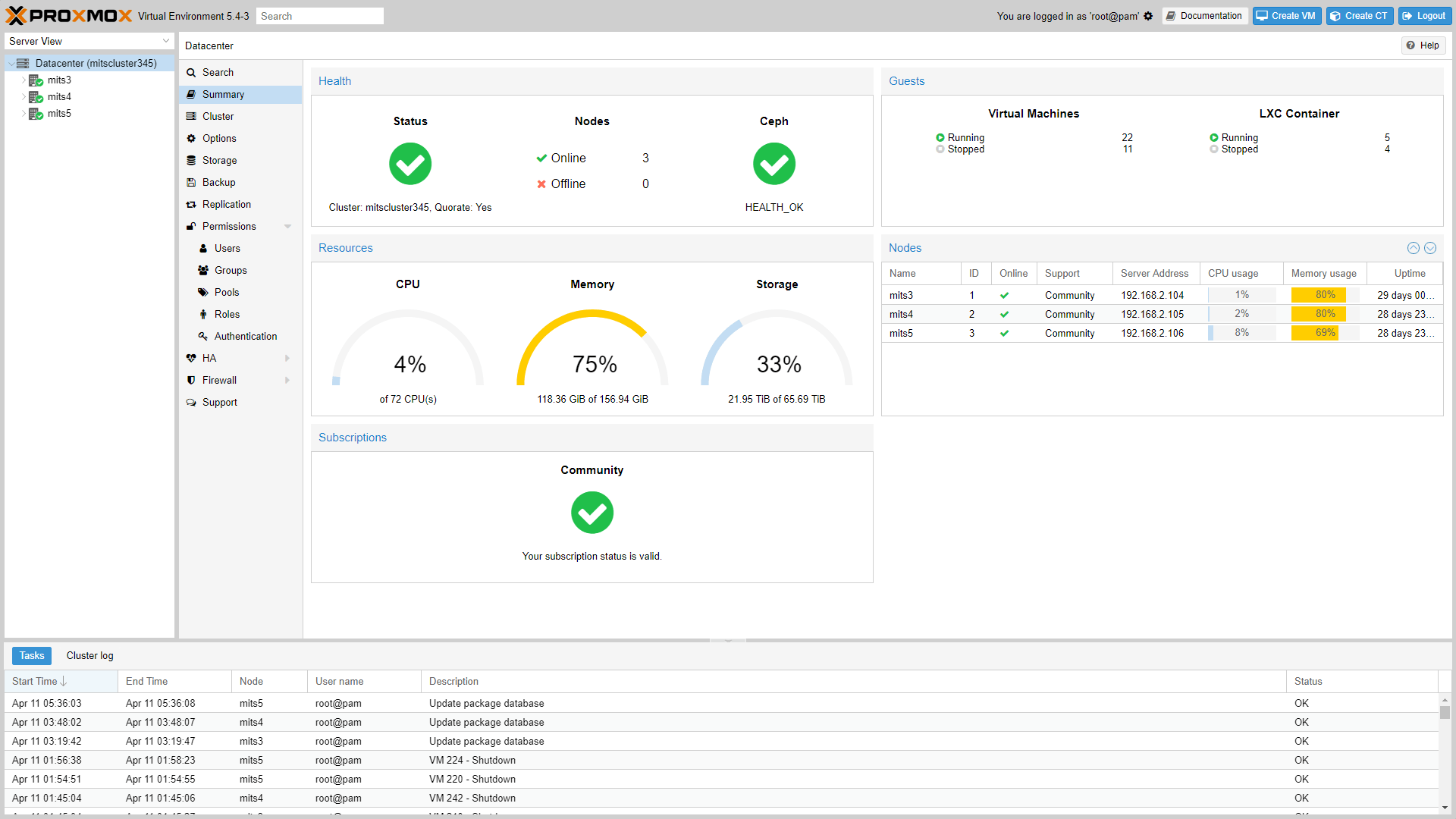

Proxmox VE is an open-source virtualization platform based on KVM and LXC containers. It can be managed through a web interface, and a command line and a REST API are also available. More information can be found on this page, and several video tutorials can be watched on this page. The software is released under the AGPL. Version 6.2 has been released with the changes listed below.
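The REST API mentioned above can be used with the API tokens that this release adds (see the list of changes below). Here is a minimal Python sketch of how a client could build an authenticated request. It assumes the token header format `PVEAPIToken=USER@REALM!TOKENID=SECRET` described in the Proxmox VE API documentation and the default API port 8006. The host name, the user `monitor@pve`, the token ID `dashboard` and the secret are hypothetical placeholders, and `pve_token_header` and `build_version_request` are illustrative helpers, not part of any Proxmox library.

```python
import urllib.request


def pve_token_header(user: str, realm: str, token_id: str, secret: str) -> dict:
    """Return the Authorization header for a Proxmox VE API token.

    Header value format: PVEAPIToken=USER@REALM!TOKENID=SECRET
    """
    return {"Authorization": f"PVEAPIToken={user}@{realm}!{token_id}={secret}"}


def build_version_request(host: str, headers: dict, port: int = 8006) -> urllib.request.Request:
    """Build (but do not send) a GET request for the /version API endpoint."""
    url = f"https://{host}:{port}/api2/json/version"
    return urllib.request.Request(url, headers=headers, method="GET")


if __name__ == "__main__":
    # Hypothetical placeholders: host, user, token ID and secret are not real.
    headers = pve_token_header("monitor", "pve", "dashboard",
                               "00000000-0000-0000-0000-000000000000")
    req = build_version_request("pve.example.com", headers)
    print(req.full_url)
    print(req.get_header("Authorization"))
```

Passing the request to `urllib.request.urlopen` would actually send it. A default installation uses a self-signed certificate, so the client should be configured to trust that certificate rather than skip TLS verification.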


Proxmox VE 6.2

- Based on Debian Buster (10.4)

- Ceph Nautilus (14.2.9)

- Kernel 5.4 LTS

- LXC 4.0

- Qemu 5.0

- ZFS 0.8.3

Web interface

- Allow deploying DNS-based Let's Encrypt (ACME) certificates

- Allow admins to see the full tree of permissions/privileges granted to a user

- Add an experimental Software Defined Network (SDN) GUI and documentation, based on modern mainline Linux kernel network technology

- Allow collapsing the Notes sub-panel in the guest summary panels, either permanently or automatically when it is empty, through the user settings

- Add a 'Creation Date' column for storage content, making it easy, for example, to find backups from a certain date

- Add device node creation to the Container feature selection

- Full support for up to 8 corosync links

- Automatic guest console reload on VM/CT startup

- Add Arabic translation

- Allow changing the language seamlessly, without logging out and back in

Container

- LXC 4.0.2 and lxcfs 4.0.3 with initial full cgroupv2 support by Proxmox VE

- Improve support for modern systemd-based Containers

- Improve default settings to support hundreds to thousands* of Containers running in parallel per node (* thousands only with simple distributions like Alpine Linux)

- Allow creating templates on directory-based storage

Backup/Restore

- Support for the highly efficient and fast Zstandard (zstd) compression

Improvements to the HA stack

- Allow destroying virtual guests under HA control when purge is set

QEMU

- Fixed EFI disk behavior on block-based storage (see known issues)

- VirtIO Random Number Generator (RNG) support for VMs

- Custom CPU types with user-defined flags and QEMU/KVM settings

- Improved machine type versioning and compatibility checks

- Various stability fixes, especially for backups and IO-Threads

- Migration:

  - Enable support for live migration with replicated disks

  - Allow specifying the target storage for offline mode

  - Allow specifying multiple source-target storage pairs (for now, CLI only)

  - Improve behavior with unused disks

  - Secure live migration with local disks

General improvements for virtual guests

- Handle ZFS volumes with non-standard mount point correctly

Cluster

- Improve lock contention during high-frequency config modifications

- Add versioning for cluster join

- Enable full support for up to 8 corosync links

Ceph

- Easier uninstall process, better informing which actions need to be taken

Storage

- Storage migration: introduce an allow-rename option and return the new volume ID if a volume with the same ID is already allocated on the target storage

- Support the 'snippet' content type for GlusterFS storage

- Bandwidth limitations are now also available for SAMBA/CIFS-based storage

- Handle ZFS volumes with non-standard mount point correctly

- Improve metadata calculation when creating a new LVM-Thin pool

- Improve parsing of NVMe wearout metrics

User and permission management

- LDAP: sync users and groups automatically into Proxmox VE

- LDAP: support LDAP+STARTTLS mode

- Allow adding and managing authentication realms through the 'pveum' CLI tool

- Full support and integration for API Tokens:

  - Shared or separated privileges

  - Token lifetime

  - Revoke it anytime without impacting your user login

Documentation

- Update hypervisor migration guide in the wiki

- Reducing the number of Ceph placement groups is possible

- Improve layout, use a left-side table of contents

Various improvements

- Firewall: make config parsing more robust and improve ICMP-type filtering

Known Issues with OVMF/UEFI disks

An EFI disk on a storage that doesn't allow small (128 KB) images (for example Ceph, ZFS or LVM(-thin)) was not correctly mapped to the VM. While this is fixed now, existing setups of this kind may need manual intervention:

- You do not have to do anything if your EFI disk uses qcow2 or "raw" on a file-based storage.

- Before the upgrade, make sure that the EFI boot binary exists on your ESP at \EFI\BOOT\BOOTX64.EFI (the default EFI boot fallback).

  - Windows and some Linux VMs using systemd-boot should do that automatically.

- If you have already upgraded and the VM does not boot, see OVMF/UEFI Boot Entries on how to recreate the boot entry via the OVMF Boot Menu.
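The manual step above comes down to whether one file exists on the ESP. Below is a minimal Python sketch for checking this from inside a Linux guest before upgrading. It assumes the guest's ESP is mounted somewhere readable (commonly /boot/efi, but that is an assumption about the guest's layout). It only reports and does not fix anything, and `find_fallback_loader` is a hypothetical helper, not a Proxmox tool.

```python
from pathlib import Path
from typing import Optional

# Components of the default fallback path \EFI\BOOT\BOOTX64.EFI
FALLBACK_PARTS = ("EFI", "BOOT", "BOOTX64.EFI")


def find_fallback_loader(esp_root: str) -> Optional[Path]:
    """Return the fallback loader on a mounted ESP, or None if it is missing.

    The ESP is FAT formatted and therefore case-insensitive, but the mounted
    tree may show any case (e.g. efi/boot/bootx64.efi), so every path
    component is compared case-insensitively.
    """
    current = Path(esp_root)
    for part in FALLBACK_PARTS:
        if not current.is_dir():
            return None
        # Pick the first directory entry whose name matches, ignoring case.
        match = next((c for c in current.iterdir() if c.name.upper() == part), None)
        if match is None:
            return None
        current = match
    # The last component must be a regular file, not a directory.
    return current if current.is_file() else None
```

For example, `find_fallback_loader("/boot/efi")` run inside the guest with sufficient privileges returns the loader path, or `None` if the fallback binary has to be put in place before the upgrade.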
