Introduction: problems of modern cores

Note: Links appearing in this article point mostly to articles in Dutch on this site. In many cases, you can find the information that is referred to in English by clicking on the link labeled 'bron' ('source') underneath the title on the page to which you are taken.

Over three years ago, Sun started releasing information on a rather precocious new concept for server processors. The idea was, instead of trying to accomplish a single task very quickly, to do many of them at the same time and achieve a decent net performance that way. The first result of this drastic change of direction is the UltraSparc T1 'Niagara', which was introduced at the end of last year. In this article, we dissect the vision behind this processor, look at the servers that Sun built around it, and above all test to what extent this concept is usable for a website database like the one used here at Tweakers.net. A 'traditional' server with two dualcore Opterons will be used for comparison. Additionally, we take the opportunity to look at the differences between MySQL and PostgreSQL on the one hand, and between Solaris and Linux on the other.

Background: the problem

Sun's motivation to get started on the Niagara series was the fact that it is getting increasingly hard to make conventional cores faster: while it may be simple to add extra muscle in the form of gigaflops, ensuring that this power is actually applied in a useful way is a problem over which thousands of smart people rack their brains on a daily basis. One problem is that other hardware develops at a much slower pace than processors, so from the core's perspective it takes longer and longer for data to become available from memory. In spite of a good deal of research that has been (and is being) done on minimizing the average access time and/or putting the waiting time to good use, the gap keeps growing.

Memory is not the only bottleneck: code does not tend to be very cooperative either. Instructions are usually dependent on each other in one way or another, in the sense that the output of one of them is necessary as input for the other. Modern cores can handle between three and eight instructions simultaneously, but on average you may call yourself lucky if two can be found that can be executed wholly independently. Often, only one can be found, and regularly there are no instructions at all that can be sent safely into the pipeline at a particular moment. Hand-optimized code is probably better in many cases, but not everything can be fixed with better software: for most algorithms there are clear practical and theoretical limits.
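To make the dependency problem concrete, here is a minimal sketch in Python. It is a toy model, not a description of any real core: the six-instruction program, the `schedule` function and the assumption that every instruction takes exactly one cycle are all invented for illustration. Each cycle, the model issues up to `width` instructions whose inputs were produced in an earlier cycle.

```python
def schedule(deps, width):
    """Greedily issue up to `width` ready instructions per cycle.

    `deps` maps each instruction name to the names it depends on,
    listed in program order. Every instruction is assumed to take
    one cycle (a simplification made for this sketch).
    """
    done_cycle = {}        # instruction -> cycle in which it was issued
    pending = list(deps)   # instructions not yet issued, in program order
    per_cycle = []
    cycle = 0
    while pending:
        # An instruction is ready once all its inputs come from earlier cycles.
        ready = [
            ins for ins in pending
            if all(d in done_cycle and done_cycle[d] < cycle for d in deps[ins])
        ]
        issued = ready[:width]
        for ins in issued:
            done_cycle[ins] = cycle
            pending.remove(ins)
        per_cycle.append(issued)
        cycle += 1
    return per_cycle


# Hypothetical program: two short dependency chains that join in "f".
program = {
    "a": [], "b": ["a"], "c": ["b"],
    "d": [], "e": ["d"],
    "f": ["c", "e"],
}

plan = schedule(program, width=3)
ipc = len(program) / len(plan)  # average instructions per cycle
print(plan, ipc)
```

Even with room for three instructions per cycle, this program averages only 1.5, because its dependency chains are the limit rather than the issue width.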

Mindlessly driving up clock speed by using ever smaller transistors is also no longer an option, because customers are becoming aware of the energy demands of their servers. Moreover, the deep pipelines that are necessary for high clock speeds make it difficult to apply the available computing power optimally, so even a large power budget will not guarantee a race monster that beats the competition in the performance arena. In other words: the simplest tricks to up a core's performance have run out, so ever bigger investments in complexity have to be made for relatively small gains.

Explosion of complexity

The problems mentioned above have led to a situation in which modern processors have tens to hundreds of millions of transistors on board that do nothing at all to increase the functionality of the chip, and are present only to ease the bottlenecks in the rest of the system. We are not only talking about the cache, but also about functionality to execute instructions in a different order than the developer specified, or to guess that an instruction will branch in a particular direction. Naturally, making mistakes is not an option, so literally hundreds of instructions, including their interdependencies, have to be tracked simultaneously in order to guarantee correct code execution under all circumstances, including the most improbable situations.

Intel, AMD and IBM are all doing their best to address these issues with their new generations of x86 and Power processors. These companies consequently pour vast amounts of money into developing and improving their cores, battling against the increase in complexity at the same time. Meanwhile, alternative paths are being explored: the Itanium series has seen a lot of the logic mentioned above removed from the hardware and moved to the compiler. The reasoning is that, compared to other architectures, developing more powerful cores will get easier and easier in the long run, while the software gets smarter at the same time. After all, compilers have lots of time to look for parallelism, compared to a processor that has to decide in a split microsecond. In practice there are other considerations that will determine the success of the Itanium approach, but that is better left to a future article.

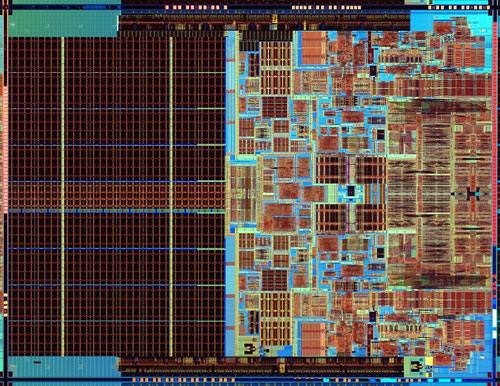

Intel's Core 2 processor: more logic than cache

The UltraSparc T1 'Niagara'

Back to Sun. During the dotcom hype, the company's processors were totally hip. There was virtually no better way to impress investors than by showing off a 19" rack full of Enterprise or Fire servers. However, over the last few years Sun's competitors have increasingly walked off with the prizes, which was reflected in consistently lower sales for the company. In 2001, the company sold 6.9 billion dollars worth of Sparc hardware, a 13.7% share of the total server market. By 2005, almost a third of that result had evaporated, even though the pain has been soothed in the last few years by sales of x86 servers, including the Opteron line that was introduced in early 2004.

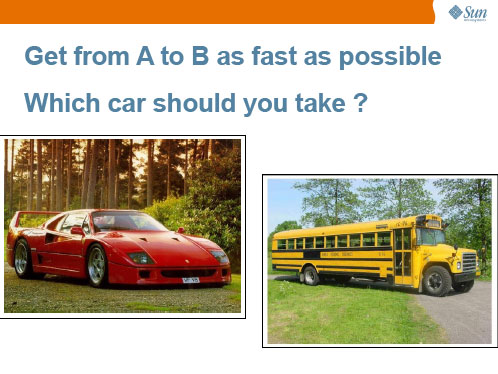

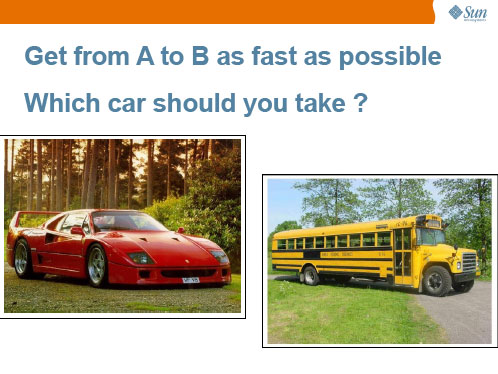

It goes without saying that Sun was not happy with this downward trend, but it did not seem to have much hope either of keeping up with the rapid pace at which its rivals were improving their cores. When the company cancelled the UltraSparc V - the evolutionary successor of the current UltraSparc IV+ - it became clear that Sun had thrown in the towel as far as singlethread performance was concerned. This radical step indicated that Sun was betting its future as a chipmaker on Niagara, the codename of a design it got its hands on through the acquisition of Afara Websystems in 2002. Originally intended as a network processor, the Niagara architecture does not so much depend on a fast core as on a combination of CMT and CMP - Chip Multi-Threading and Chip Multi-Processing. This concept is known as 'Throughput Computing', or 'CoolThreads Technology' by its current hip official name. Sun uses the following analogy to illustrate the concept for lay persons:

The answer obviously depends on the number of passengers.

Of course other manufacturers have witnessed the same trends as Sun did, so the advent of multicores had been widely predicted, and even partially undertaken, before Sun made its strategy public. The difference, however, is that the competition does not approach the issues as radically: it still tries to strike a balance between advanced cores with decent singlethreaded performance and a moderate degree of duplication of those cores. For now, most companies agree that two cores per socket is fine, although quadcores are appearing on the horizon. Sun is not wasting any time and has jumped straight to eight cores with the first product in the series.

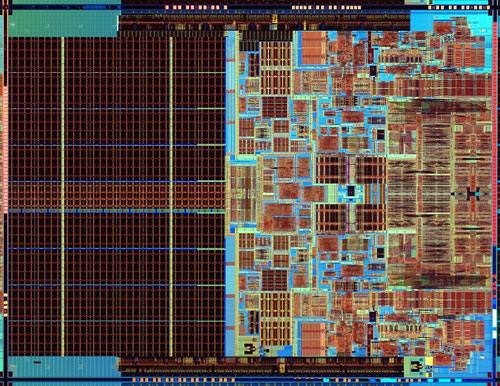

Niagara cores are comparatively simple. Other than multithreading, at the conceptual level there isn't a great deal more going on than in a 486: there is a maximum of one instruction per clock tick, and instructions are handled on a first come, first served basis. The multithreading isn't too advanced either: at every clock tick, execution shifts to the next of a maximum of four active threads. This is a great deal simpler than HyperThreading (SMT), where instructions from multiple threads may go into the pipeline together. However, keeping the design simple makes it possible to integrate eight of these cores together with a quad channel memory controller and a shared FPU onto a single chip, without power consumption going through the roof. The full specifications, compared with those of the Opteron, are as follows:

| Specification | Sun UltraSparc T1 | AMD Opteron (Revision E) |
|---|---|---|
| Cores | 8 | 2 |
| Threads per core | 4 | 1 |
| Production process | 90nm | 90nm |
| Clock speed | 1.0 - 1.2GHz | 2.2 - 2.6GHz |
| Number of transistors | 300 million | 233 million |
| Size | 380mm² | 194mm² |
| Pipeline design | In-order | Out-of-order |
| Pipeline length (integer) | 6 steps | 12 steps |
| Pipeline length (float) | N/A | 17 steps |
| Max. instructions per tick | 1 | 3 |
| L1 cache (per core) | 8KB data, 16KB instruction | 64KB data, 64KB instruction |
| L2 cache | 3MB (shared) | 2MB (1MB per core) |
| Memory controller | 4x DDR2-533 (34.1GB/s) | 2x DDR400 (12.8GB/s) |
| Internal communication | Internal crossbar (134GB/s) | HyperTransport (24GB/s) |
| Number of socket pins | 1933 | 940 |
| TDP | 79W | 95W |
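The per-tick thread switching described before the table can be illustrated with a toy model in Python. This is only a sketch under stated assumptions: the function `run_core`, the miss rate and the miss latency are made up, and the rule that a thread waiting on memory is simply skipped is an assumption of this model rather than something taken from the text above. The core issues at most one instruction per tick and rotates through its threads in round-robin order.

```python
def run_core(num_threads, instrs_per_thread=1000, miss_every=10, miss_latency=30):
    """Return the fraction of ticks in which the core issued an instruction.

    Toy model: every `miss_every`-th instruction of a thread misses the
    cache and blocks that thread for `miss_latency` ticks (both numbers
    are invented for illustration).
    """
    remaining = [instrs_per_thread] * num_threads
    ready_at = [0] * num_threads  # first tick at which each thread may issue
    tick = 0
    issued = 0
    last = -1                     # thread that issued most recently
    while any(remaining):
        # Round robin: start looking at the thread after the last one used.
        for offset in range(1, num_threads + 1):
            t = (last + offset) % num_threads
            if remaining[t] > 0 and ready_at[t] <= tick:
                remaining[t] -= 1
                issued += 1
                executed = instrs_per_thread - remaining[t]
                if executed % miss_every == 0:
                    # Simulated cache miss: the thread sits out for a while.
                    ready_at[t] = tick + 1 + miss_latency
                last = t
                break
        tick += 1                 # the tick passes whether or not we issued
    return issued / tick


for n in (1, 2, 4):
    print(n, "thread(s): core busy", f"{run_core(n):.0%}", "of the time")
```

With these invented parameters a single thread keeps the core busy only about a quarter of the time, while four threads keep it busy well over half the time. That is the effect the design is after: one thread's waiting time is filled with another thread's work.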

Doing some very superficial yet illustrative maths yields the following statistics: the Niagara processor needs 48mm², 38 million transistors and 10 Watts per core, while the Opteron requires 97mm², 117 million transistors and 48 Watts. This means that Sun has come up with a unique design that hardly looks like anything made by the competition. Actually, it is valid to wonder if one can speak of competition in this respect: the T1 has a number of properties that put it outside a large share of the market. A lack of FPU power, the impossibility of installing more than one chip per motherboard and poor singlethread performance pretty much guarantee it is no threat to Intel, IBM or AMD. But there are a number of specific areas where Niagara excels, as Sun likes to emphasize on its website. The company also stresses the chip's favourable performance per Watt. Hence, it is about time to take a close look at this type of server, and see how it stands up against the 'traditional' Opteron configuration.
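The per-core figures quoted above follow from dividing the chip-level numbers in the specification table by the core count. A short Python sketch of that arithmetic (the dictionary layout and the `per_core` helper are just illustrative names):

```python
# Chip-level figures copied from the specification table.
CHIPS = {
    "UltraSparc T1": {"cores": 8, "area_mm2": 380, "transistors_m": 300, "tdp_w": 79},
    "Opteron": {"cores": 2, "area_mm2": 194, "transistors_m": 233, "tdp_w": 95},
}


def per_core(chip):
    """Divide die area, transistor count and TDP evenly over the cores."""
    cores = chip["cores"]
    return {key: chip[key] / cores for key in ("area_mm2", "transistors_m", "tdp_w")}


for name, chip in CHIPS.items():
    share = per_core(chip)
    print(
        f"{name}: {share['area_mm2']:.1f} mm², "
        f"{share['transistors_m']:.1f} million transistors, "
        f"{share['tdp_w']:.1f} W per core"
    )
```

Like the figures in the text, this is deliberately superficial: it charges shared parts such as the L2 cache, memory controller and crossbar to the cores in equal shares.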

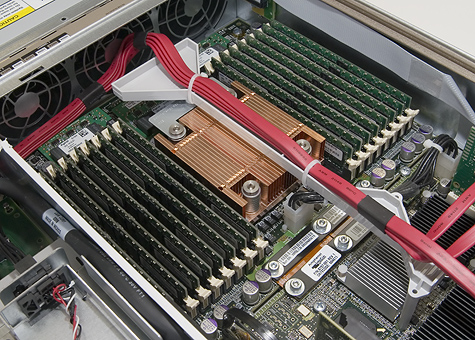

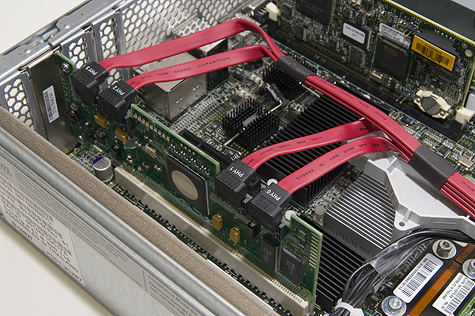

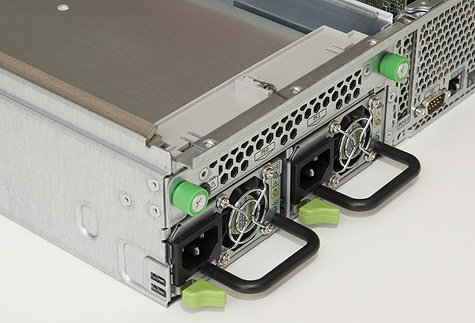

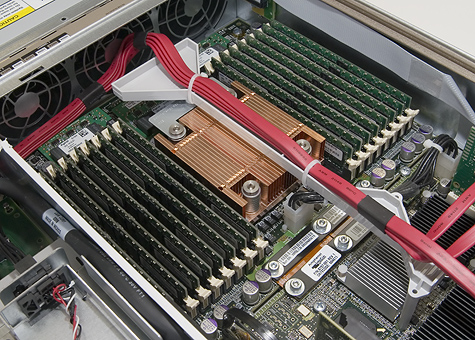

Test candidates: T2000 and X4200

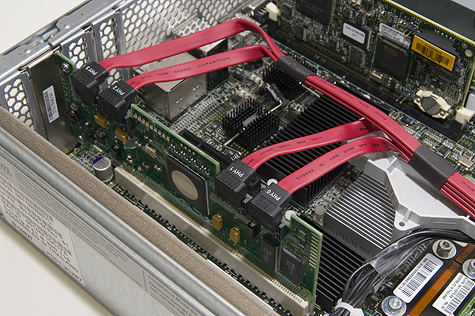

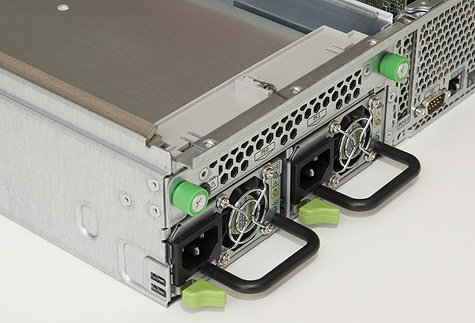

We used two Sun machines in this test: a T2000 and an X4200. Both are 2U rack mounted servers, with the processor being the most significant difference: the T2000's heart is an UltraSparc T1 with eight cores clocked at 1GHz, while the X4200 is fitted with two dualcore Opteron 280 processors at 2.4GHz. Both machines had two Fujitsu 73GB SAS hard disks (2.5", 10,000RPM) at their disposal, hooked up to an LSI RAID controller. Some additional differences should be noted: the UltraSparc had 16GB of DDR2-533 memory on board, while the Opteron had to make do with 8GB of DDR-400. Since tests we conducted showed that the step from 4GB to 8GB hardly yields increased returns given our benchmark, we do not believe that the T2000's 16GB of memory gave it an unfair edge.

A further minor point is that the SAS controller came with the T2000 in the form of a slot card, while the X4200 has it fixed to the motherboard. Additional differences are to be found mainly in the machines' construction: although both look sturdy and well-finished, Sun has made some slightly different choices for each machine. For instance, the double Opteron needs more cooling for its 190W TDP than the 79W UltraSparc T1. The design of the cooling systems is the same though: cool air is sucked in through the front and blown right across processor and memory. The case lid is designed in such a manner as to make sure that the warmest elements get most of the airflow.

T2000

X4200

Remote administration with ILOM and ALOM

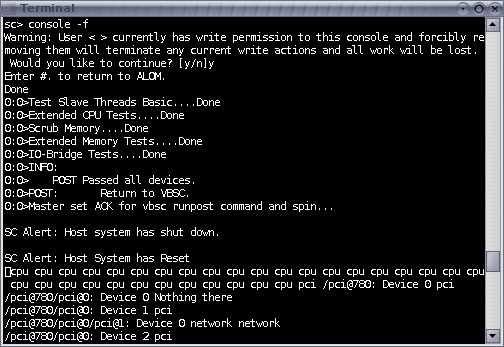

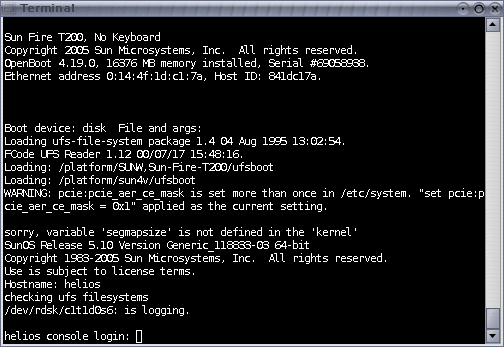

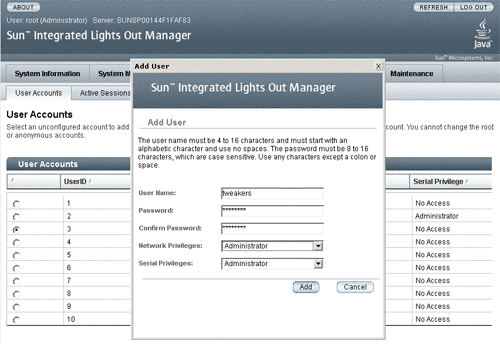

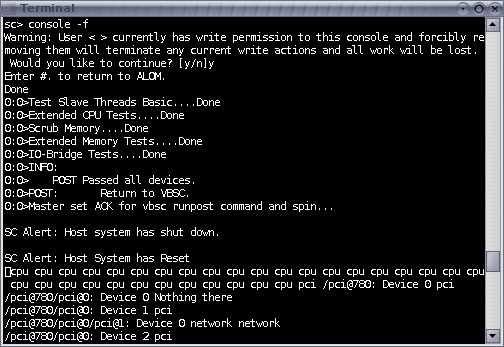

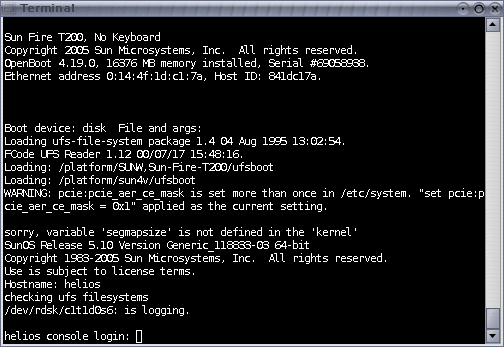

Remote administration is often a must when it comes to servers, and many features in this area offer manufacturers an opportunity to distinguish themselves from each other. At the moment, Sun has two distinct systems: ALOM and ILOM. Both of these allow a great deal of information about the hardware to be accessed as well as the option of sending out messages in case things (appear to) go wrong. Additionally, the Linux or Solaris console can be run, and as a last resort the system can be sent a remote instruction for a hard reset.

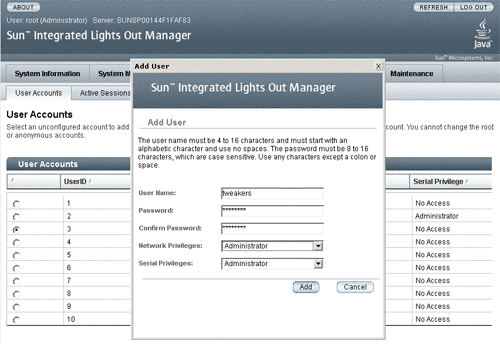

The difference between the two systems lies largely in the way these functions can be accessed: ALOM can only be run via telnet (insecure), SSH (secure), or a serial cable, and is therefore limited to the command line interface. ILOM, on the other hand, can also be accessed via an integrated HTTPS server, meaning that a user friendly graphical user interface is available that can be grasped with greater ease.

Unfortunately, only the X4200 (Opteron) comes with ILOM; the T2000 has to make do with ALOM for now. There is no way around this: the UltraSparc server lacks a monitor connection, and installing a new operating system (or completing the pre-installation of Solaris 10) requires working via another computer. Moreover, the network interface is switched off by default, compelling administrators to get to work with a serial cable at least once. Sun has indicated that this point will be improved. Installation of the X4200 posed no problems: this can be done in a straightforward fashion by using the monitor or the network interface, which is switched on by default in the Opteron.

ALOM

ILOM

MySQL optimizations

Testing the UltraSparc T1 was not a trivial task: we spent a good three months finding the optimal configuration for our tests, for which we worked together with people from Sun, who in turn worked with people from MySQL. In all, two billion queries were fired, spread out across 3,500 serial runs, which took more than nineteen days to complete. Our research showed that results can vary greatly, which taught us that this machine is not easy to tame. If you want to get it to achieve its maximum potential, you need the right combination of software and settings, which can demand a great deal of patience. We are reasonably convinced that we have done the best that we can do for now, but Sun is still researching our benchmark because the company believes that it can be improved. In response to the problems we found, the company has come up with an improvement for the Sun Studio compiler which may allow considerable gains at a later stage, but we did not want to wait for its release before publishing our results.

Compared to the previous article, a number of things have been changed in the test method. The most visible aspect is the change from 'queries per second' to 'requests per second'. This allows different databases to be compared in a more straightforward fashion, since one may need more queries to build a given page than another. An example is MySQL 4.x, which does not handle subqueries optimally and hence performs faster on greater numbers of simple queries. An alternative such as PostgreSQL, on the other hand, is at its best when handling complex queries, of which fewer will be needed.

Additionally, we have switched to using two client machines to generate the database loads, since during the testing phase for the last review (unfortunately towards the end) this turned out to yield consistently better results. Also, we have added functionality that enables us to switch off cores and/or threads, in order to study the scaling behaviour of the servers.

To demonstrate the major effect that a few minor alterations can have, a graph is shown below with different MySQL 5.x results that we obtained during our research. The number of cores and the amount of memory are kept constant, but in spite of that the scores of the different MySQL versions vary wildly. Settings can also make a huge difference: for example, a 5.0.18 version that was tweaked by Sun was a good deal faster than our original configuration, although only one parameter had been altered.

MySQL scaling behaviour

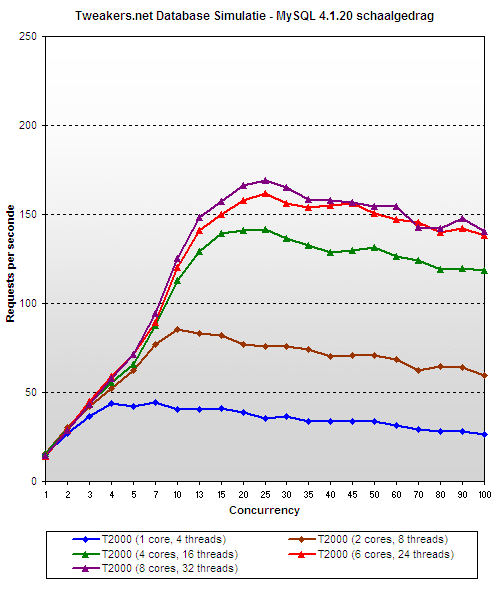

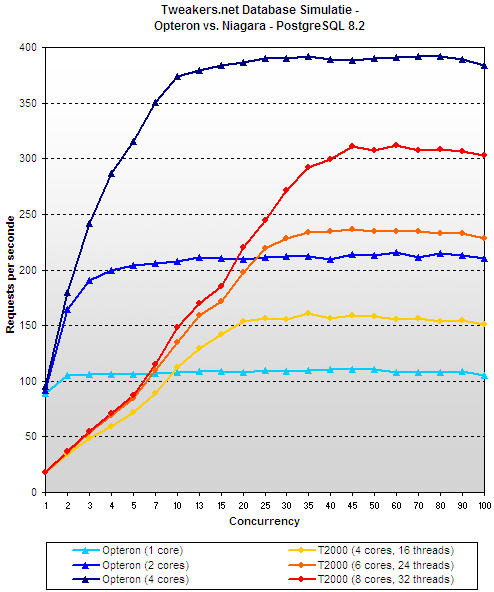

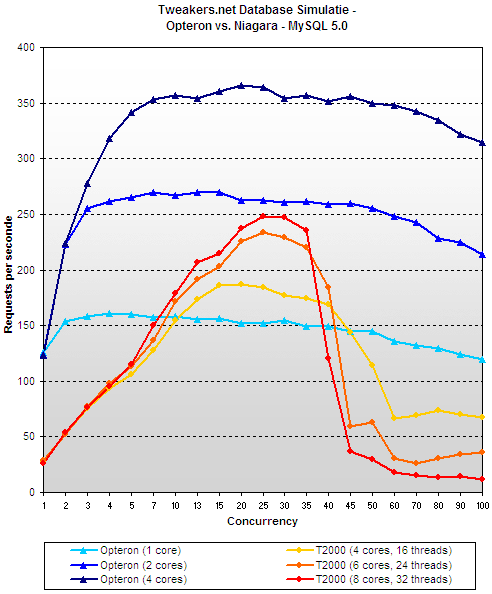

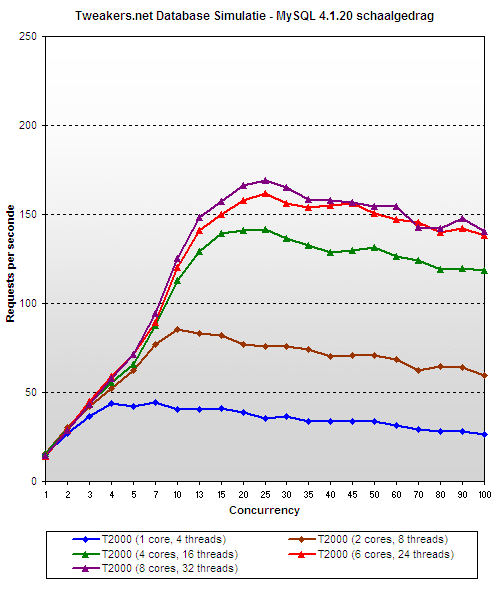

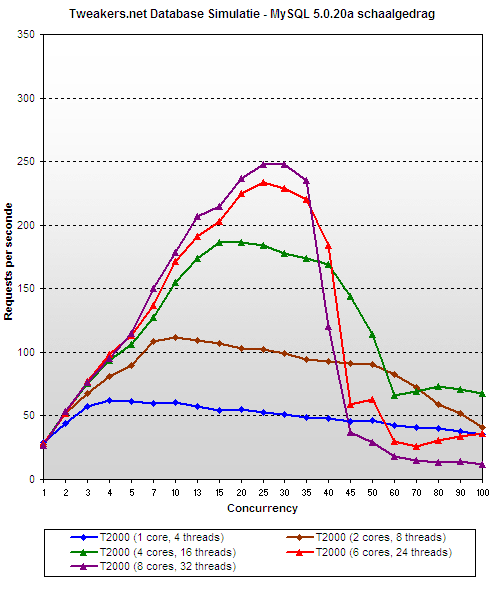

In our previous article we were already forced to conclude that MySQL 4.x does not scale tremendously well with multiple cores or threads, but since it is (still) the version on which our site runs, as well as many others, we wanted to see how the software would manage on the UltraSparc T1. As it turns out, the first two steps to two and four cores are smooth, but at the next, from four to six, things begin to disappoint. The final step to eight cores even proves to be barely noticeable in the results. Under heavy site load, doubling the number of cores to two yields a performance gain of 112%, going to four cores still makes for an improvement of 78%, but the increase to eight only delivers an extra 18%.
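The three percentages above are gains per doubling, so they compound. A small Python sketch of that arithmetic (the function name `cumulative_speedup` is ours, and the only inputs are the three gains quoted in the text) shows what they add up to relative to a single core:

```python
def cumulative_speedup(step_gains_percent):
    """Compound successive percentage gains into speedups over the baseline."""
    speedup = 1.0
    speedups = []
    for gain in step_gains_percent:
        speedup *= 1 + gain / 100
        speedups.append(speedup)
    return speedups


# MySQL 4.x under heavy load: gains for 1 -> 2, 2 -> 4 and 4 -> 8 cores.
mysql4 = cumulative_speedup([112, 78, 18])

for cores, speedup in zip((2, 4, 8), mysql4):
    print(f"{cores} cores: {speedup:.2f}x a single core")
```

Compounded this way, eight cores deliver roughly 4.5 times the performance of one, with almost all of that already reached at four cores.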

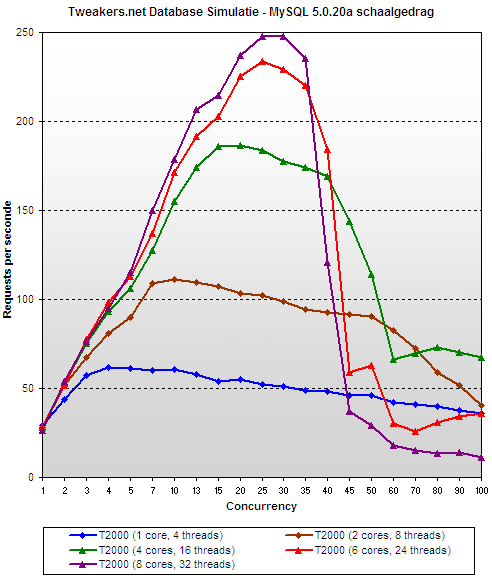

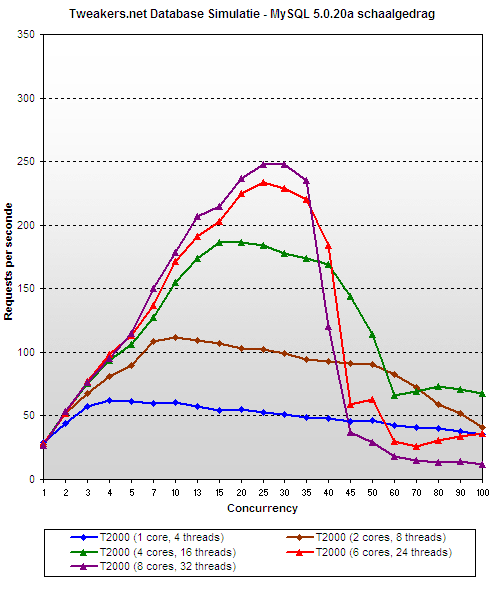

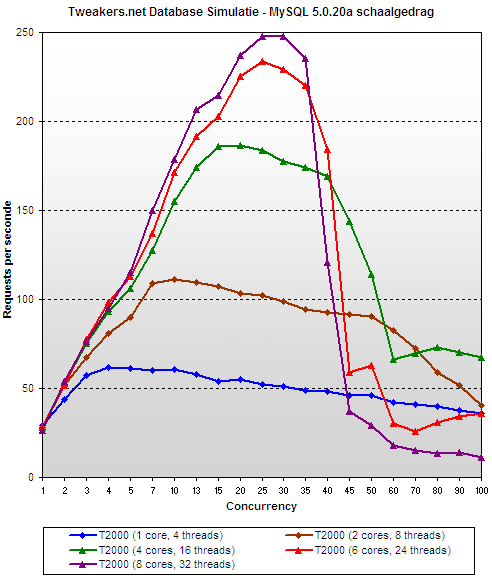

In MySQL 5.0, a great many problems relating to scaling behaviour have been addressed, which is noticeable in the form of clearly higher performance peaks. In spite of that, the move to eight cores still yields little performance increase, and moreover, some dramatic new behaviour appears to have been introduced: performance collapses under heavier loads (more than 40 simultaneous users). To make things worse: the higher the peak, the sharper the drop. While for 25 simultaneous users we are still seeing logical results, bizarre situations start to crop up from 50 users and up, where one core turns out to be quicker than eight. The reasons for this are unclear: neither our system administrator nor the experts at Sun and MySQL were able to provide a solution. Experiments with various (beta) versions did not reveal any improvements, which means that for the moment, we shall just have to accept things the way they are.

MySQL vs. PostgreSQL

Even though MySQL is probably the best-known name in the open source database world, a number of excellent alternatives are available. One of them is PostgreSQL, the development of which started in 1986. The project's initial goal was to design a successor to another database that went by the name of Ingres, which explains the name: 'post-Ingres' slowly changed to Postgres and later to PostgreSQL. The current version of the database is 8.1, and it is regarded as being at least as good as, if not better than, MySQL. Since the discussion of which is 'the best' database is both sensitive and tedious, we avoid it in this article, but it is nevertheless interesting to take a look at how well or badly Tweakers.net runs on PostgreSQL.

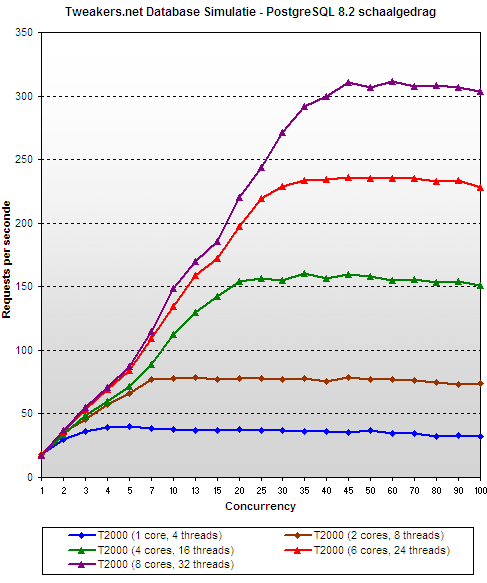

We decided to compile a CVS snapshot of PostgreSQL 8.2 (alpha), as the word is that this version scales better than the current official release. Since we ran a beta version of MySQL 5.1, we figured it would be fair to give PostgreSQL a chance as well. When version 8.2 turned out to run without problems as well as very fast, we felt there wasn't a good reason any more to fall back on the latest release.

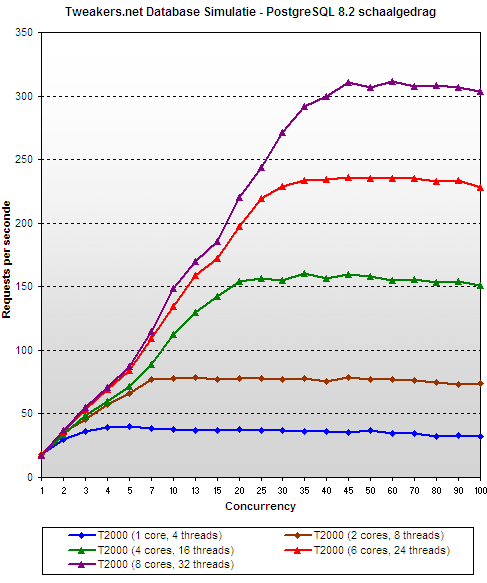

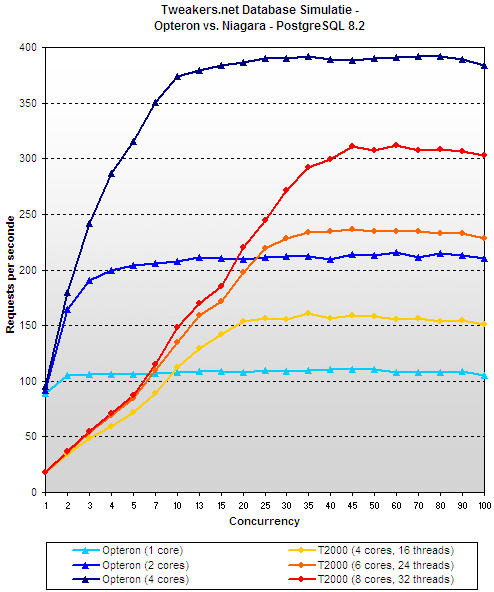

In contrast to all MySQL versions that we tried, PostgreSQL scales almost perfectly. With a load of ten simultaneous users, the step from one to two cores yields on average a performance increase of 114%, going to four cores improves things by 96%, and the increase to eight cores adds another 77%. This means that eight cores deliver 7.4 times as much as a single core. Another relief in comparison to MySQL comes in the form of stable performance after the maximum is reached: collapses such as we saw in MySQL when the load exceeds the server's capacity do not occur. When we only take the heavy loads at the end of the graph into consideration, the lines appear to flatten out, which means that the scaling behaviour is even better: the gains of the core doublings to 2, 4, and 8 are respectively 122%, 104% and 98%. In other words, the performance between one and eight cores differs on average by a factor of nine.
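The factors of 7.4 and nine can be checked by multiplying the per-doubling gains, and dividing by the number of cores gives a rough scaling efficiency. A minimal Python sketch, using only the percentages quoted above (the names `total_speedup`, `light` and `heavy` are ours):

```python
from math import prod

CORES = 8


def total_speedup(step_gains_percent):
    """Multiply the gains of successive core doublings into one factor."""
    return prod(1 + gain / 100 for gain in step_gains_percent)


# PostgreSQL, gains for 1 -> 2, 2 -> 4 and 4 -> 8 cores.
light = total_speedup([114, 96, 77])    # ten simultaneous users
heavy = total_speedup([122, 104, 98])   # heavy loads at the end of the graph

for label, speedup in (("light load", light), ("heavy load", heavy)):
    efficiency = speedup / CORES
    print(f"{label}: {speedup:.2f}x on {CORES} cores, efficiency {efficiency:.0%}")
```

Under heavy load the efficiency comes out slightly above 100%, which simply reflects the quoted factor of nine on eight cores; the sketch does not attempt to explain it.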

PostgreSQL might be called a textbook example of a good implementation of multithreading, making it the ideal application for the UltraSparc T1 to show what it's made of. As long as a task does not require too much computing power, but the software is capable of splitting itself effortlessly into independent parts, the T2000 is in its element. The MySQL 5.0.20a graph shown below (the same as on the previous page except for the scale adaptation) illustrates the contrast once more, and demonstrates the importance of not just having multithreading in the software, but also a well-scaling implementation of it.

It took a lot of trouble to make our benchmark suitable for PostgreSQL: Tweakers.net’s regular code is highly optimized to satisfy the 'unique personality' of MySQL 4.0. A direct copy of the database and the queries led to hopelessly bad results, so in order to give PostgreSQL a fair chance, indices were replaced, subqueries were applied, and particular joins were rewritten. This re-optimization yielded an improvement by a factor of four. Still, the effort fell far short of the work done over the years to get MySQL to behave properly, which makes us suspect that more performance can be dragged out of PostgreSQL.

Sun UltraSparc T1 vs. AMD Opteron

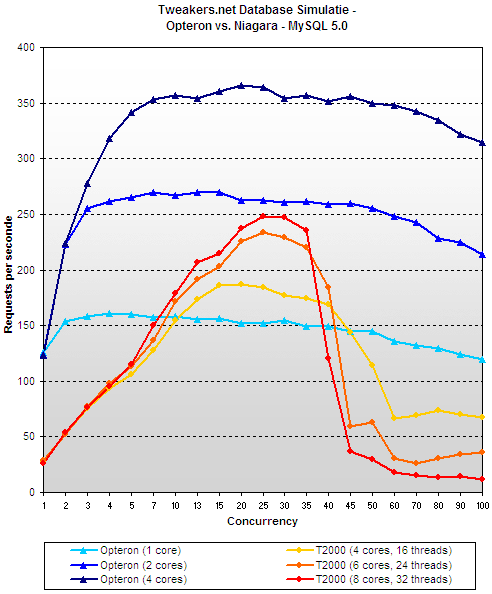

We have seen that Sun's UltraSparc T1, provided it is loaded with the right software, is able to scale well. This is a nice fact for demonstrating the underlying philosophy, but without comparisons it does not say an awful lot about the processor's potential. Are 32 slow threads quick enough to beat two or four fast cores? To answer that question, we ordered an X4200, with two 2.4GHz dualcore Opterons, alongside the T2000. In order to take the operating system out of the equation, we installed Solaris 10 on the X4200 as well. A difference was that the Opteron 'only' had 8GB of memory (compared to the 16GB in the T2000), but as indicated before, everything above 4GB has little influence on the performance in this benchmark.

It is immediately clear that the Opteron not only performs clearly better than the UltraSparc T1, but also that it reaches its peak sooner. The X4200 is at full steam from about ten simultaneous users, while the T2000 does not reach its full potential until about forty of them hook up. When we scale things down, the two Opteron cores have little difficulty beating the four Niagara cores. We have confirmed this both by switching off a single core in both Opterons, and by turning off one of the processors completely. The difference in performance was minimal in both cases.

In MySQL, this picture crops up again, with the exception that things look worse for the T2000 because of the poor scaling behaviour. Remarkably enough, the Opteron's performance does not collapse under heavy loads, although it doesn't get better after a certain point. In any case, in MySQL the two Opteron cores beat the eight Niagara cores in all cases. The disappointing impression left by MySQL hardly makes a good case for the philosophy of simplifying cores in order to stick as many as possible on a chip. Having said that, there are people out there who have had success in their efforts and have made MySQL fly on the UltraSparc T1, but apparently our load patterns are not suited to it.

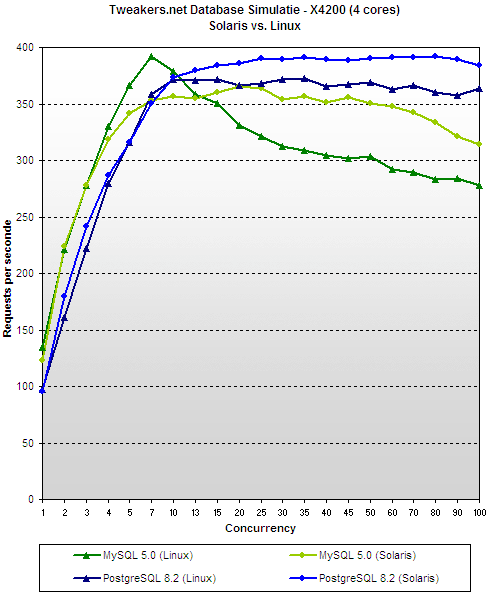

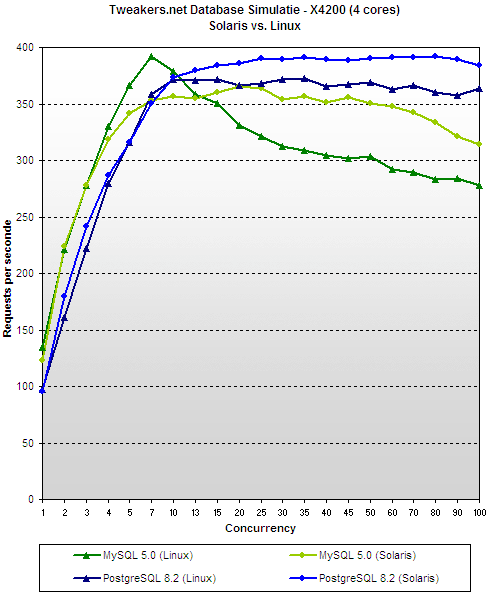

Solaris vs. Linux

The only factor missing from the comparison on the previous page is the operating system. The UltraSparc T1 server of this review was tested with the latest version of Sun's own Solaris 10. To keep things fair, we installed the same OS on the Opteron, but curious as we are, we wanted to know the difference in performance between Solaris and Linux. We picked Ubuntu server edition, but did not succeed in getting it to work on the T2000. This means that we only have results for the Opteron, but these tell an interesting story by themselves: at concurrencies of 15 and above, Solaris was between 6% and 14% quicker than Linux, running PostgreSQL and MySQL, respectively. Quite remarkably, the latter does show a higher performance peak running Linux.

For completeness, it should be noted that we did not succeed in getting the X4200's integrated SAS-controller to work under Linux, so instead we used a separate Areca 1120 card with a couple of Raptors. According to our disk expert Femme this set-up ought to perform better, but since no RAID was used and the system had 8GB of memory (with disk activity already very low on 4GB), we believe its influence will have been minimal.

Power usage, prices, and conclusion

Unfortunately, we have little choice but to be disappointed in the UltraSparc T1's performance: even with the perfectly scaling PostgreSQL, the machine is very convincingly overtaken by the 'average' Opteron server, which costs about half its price. It can only be hoped that the T2000 manages better in other situations, with performance gains that are large enough to justify the difference in price, since otherwise the radically designed chip is in danger of being trampled by competitors that take a more gradual and conservative approach to the switch to multicore architecture. It has to be said though that a plus of the T2000 is that it is very energy efficient: at full load it only pulls 232W out of the mains, while the Opteron needs about 50% more to do the same task. When we look at the performance per Watt in PostgreSQL, we measure peaks of 1.17 requests per second per Watt for the Opteron, and 1.34 requests per second per Watt for the T2000, which translates to an advantage of about 15% for the Niagara. Sun also wants to take the server's height into consideration in its own SWaP measure, but since both of them were 2U, this does not change the picture.

| Energy consumption | Watts |
|---|---|
| Sun X4200 (4 cores, 2.4GHz) - load | 341 |
| Sun X4200 (4 cores, 2.4GHz) - idle | 274 |
| Sun T2000 (8 cores, 1GHz) - load | 232 |
| Sun T2000 (8 cores, 1GHz) - idle | 223 |

| Prices in euros (source: Sun.nl, 16-7) | Price |
|---|---|
| X4200 (4-core, 2.4GHz, 8GB) | 6800 |
| T2000 (4-core, 1GHz, 8GB) | 8300 |
| T2000 (6-core, 1GHz, 8GB) | 10900 |
| T2000 (8-core, 1GHz, 8GB) | 13400 |
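The energy comparison can be reproduced from the load figures in the energy table and the two performance-per-Watt peaks quoted in the conclusion. A short Python sketch of the arithmetic (the helper name `percent_more` is ours; the numbers are the article's):

```python
# Power draw under load, in Watts, from the energy consumption table.
LOAD_WATTS = {"X4200": 341, "T2000": 232}

# Peak PostgreSQL results in requests per second per Watt, as quoted in the text.
REQ_PER_SEC_PER_WATT = {"X4200": 1.17, "T2000": 1.34}


def percent_more(value, reference):
    """How many percent `value` is above `reference`."""
    return (value / reference - 1) * 100


extra_power = percent_more(LOAD_WATTS["X4200"], LOAD_WATTS["T2000"])
efficiency_gain = percent_more(
    REQ_PER_SEC_PER_WATT["T2000"], REQ_PER_SEC_PER_WATT["X4200"]
)

print(f"Opteron server draws {extra_power:.0f}% more power under load")
print(f"T2000 delivers {efficiency_gain:.1f}% more requests per second per Watt")
```

The results land close to the rounded figures in the text: somewhat under 50% more power for the Opteron server, and an efficiency advantage of a little under 15% for the T2000.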

It is important to realize that we have tested only one application: the Tweakers.net database. That the T2000 is not an ideal choice for that does not mean that the machine can be dismissed: it may well do a good job as a web server. Sun did break several records with the T2000, so applications can be conceived for which it is better suited. The most important conclusion to be drawn is that purchasers of this server need to be very careful about what they want to use it for, since if the intended application happens to be unsuitable, buying an UltraSparc T1 can be an expensive mistake. Such risks are considerably smaller for regular Opterons or Xeons. Fortunately, Sun understands this only too well, and hence the company is very helpful to people wishing to give the T2000 a try.

The future

The UltraSparc T1 is only the first in a new line of Sun processors, and if the company's roadmap is to be believed, a Niagara II baked using a 65nm process will appear next year. Rumour has it that this chip will be able to handle twice as many threads, clock somewhat higher (a maximum of 1.4GHz) and have its own FPU for every core instead of sharing one. Moreover, instead of direct DDR2 support, the talk is that there will be a switch to FB-DIMM. The year 2008 would see the appearance of Rock, which is supposed to offer improved singlethread performance. According to the rumours, Sun has invented a very interesting way to handle multithreading flexibly. In principle, sixteen 'cores' are each meant to get to work on two threads, but the cache is to be shared by groups of four: every quarter of the processor would get 64KB of L1 and 2MB of L2. It is said that the cores do not just share their caches, but also unused computing power. This would be like having four 'super cores' that each handle eight threads.

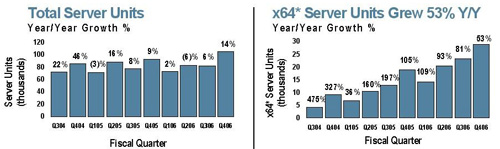

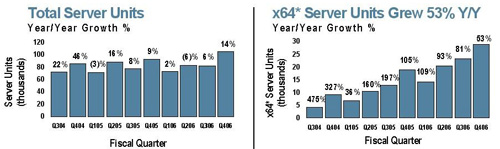

Even though Sun has innovative ideas for a future processor, it did take a risk by choosing this path: the chip really isn't quite as versatile as the x86 competition, and cannot participate either in the higher market segments that are the domain of the Power and Itanium processors. Having said that, the design has some unique benefits, which will need to translate into high sales figures to compensate for the rest. At the moment, the UltraSparc IV+ is guarding the fortress, but the cancellation of its proposed successor means that Niagara will have to make it on its own. So far, sales figures have not been spectacular: in a recent presentation accompanying financial results, Sun indicated that it had sold slightly more servers, but a much sharper rise of Opteron sales reveals that Sparc sales are still on the way down. At the moment, Niagara generates revenue of 100 million dollars per quarter, on a total of 1.3 billion in the area of servers.

Sun will have to dedicate a great deal of attention to the Niagara design in order to make it more versatile, but of late it appears to be just cutting costs in its processor department. The cancellation of the UltraSparc V meant the loss of five hundred jobs; another sixty people defected to AMD, and recently a further two hundred jobs were cut, among them people who were working on the Rock coprocessors. After the resignation of Scott McNealy, the new CEO Jonathan Schwartz announced that another four to five thousand jobs are to go at Sun. It is unclear to what extent this will concern the chip department. Disaster will probably not hit the company though, even if it does not score with Niagara and its successors: it struck a deal with Fujitsu last year for the sale of servers with the Sparc64 processor. The x86 might also take a share of the burden: Transitive (the company that makes the PowerPC emulator that Apple uses for its Intel Macs) recently released software for running Sparc applications.

We hope that Niagara manages to capture a sufficient share of the market to justify further development of the processor. Diversity in the market is good for consumers, and Sun could become one of the few companies to challenge the dominance of the x86 design. That said, we do wonder to what extent Sun is prepared to put up a fight: will it do whatever it takes to make the chip a success, or will the concept fall prey to cost cutting if it does not make money quickly enough? According to Sun itself, the company is determined to see it through: the roadmap continues beyond 2010.

Acknowledgements

Tweakers.net would like to thank the following people: Bart Muyzer and Hans Nijbacker (Sun Netherlands) for their cooperation with this article and their efforts to improve the benchmark results; Jochem van Dieten (database consultant and regular visitor of our forum) for his help with PostgreSQL; ACM and moto-moi for running the tests and for their help processing and interpreting the results; and Mick de Neeve for the English translation.

Background: the problem

Memory is not the only bottleneck: code does not tend to be very cooperative either. Instructions usually depend on each other in one way or another, in the sense that the output of one is needed as input for another. Modern cores can handle between three and eight instructions simultaneously, but on average you may count yourself lucky if two can be found that can be executed wholly independently. Often only one can be found, and regularly there are no instructions at all that can safely be sent into the pipeline at a particular moment. Hand-optimized code probably does better in many cases, but not everything can be fixed with better software: for most algorithms there are clear practical and theoretical limits.
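To make the effect of such dependencies concrete, here is a minimal sketch in Python. It is a toy model of our own and not a description of any real core: every instruction is assumed to take exactly one cycle, the core may issue up to `width` instructions per cycle, and an instruction may only issue once all of its inputs were produced in an earlier cycle. The function name `schedule` and the example instruction lists are hypothetical.

```python
def schedule(deps, width):
    """Return the number of cycles needed to issue all instructions.

    deps[i] lists the indices of the instructions whose output
    instruction i needs. Assumption: unit latency, and dependencies
    only point to earlier instructions (no cycles).
    """
    issued_in = {}                      # instruction index -> issue cycle
    remaining = list(range(len(deps)))
    cycle = 0
    while remaining:
        # Ready: every input was issued in a previous cycle.
        ready = [i for i in remaining
                 if all(d in issued_in for d in deps[i])]
        if not ready:
            raise ValueError("circular dependency between instructions")
        now = ready[:width]             # the core issues at most `width`
        for i in now:
            issued_in[i] = cycle
        remaining = [i for i in remaining if i not in now]
        cycle += 1
    return cycle


# Six instructions that each need the result of the previous one.
chain = [[], [0], [1], [2], [3], [4]]
# Six instructions without any mutual dependencies.
independent = [[], [], [], [], [], []]
# Two interleaved chains of three instructions each.
two_chains = [[], [], [0], [1], [2], [3]]

for name, program in [("chain", chain),
                      ("independent", independent),
                      ("two chains", two_chains)]:
    cycles = schedule(program, width=4)
    print(f"{name}: {cycles} cycles, {len(program) / cycles:.1f} per cycle")
```

In this model, a core that can issue four instructions per cycle still needs six cycles for the fully dependent chain, exactly as many as a core that issues only one. Only the independent instructions make use of the extra width, which is the situation the paragraph above calls lucky.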

Explosion of complexity