Introduction

Note: Links appearing in this article point mostly to articles in Dutch. In many cases, you can find the information that is referred to in English by clicking on the link labeled 'bron' ('source') at the top of the page to which you are taken.

Since the introduction of AMD's Opteron in April 2003, Intel's main competitor has seen its share of the x86 server market grow from a meagre three percent to more than a quarter. There were good reasons for this growth: the K8 design proved to be faster for a good many applications and also turned out to be more efficient and scalable than its competitors. In addition, the Opteron led the field with techniques such as 64-bit extensions and dualcore technology, leading reviewers to conclude time and again that Intel's Xeon was lagging behind. Needless to say, this was painful for the chip giant, but also ample motivation to make some drastic changes. Over the last few years, the company has worked hard on a new generation of server chips, under the codename 'Woodcrest', and finally released the result in the form of the Xeon 51xx series. In this article, we look at what Intel did in order to turn the tide and to what extent its efforts can be called successful.

As in previous articles in this series, we use our own benchmarks, which we developed on the basis of the database queries executed to serve this site to our audience. The main subject of this review is a dual 2.66GHz Fujitsu-Siemens Woodcrest server, which is pitted against a Socket F Opteron with DDR2 memory that we reviewed earlier. The CPU of the Woodcrest's competitor is certain to remain AMD's showpiece up to the second half of next year. For completeness, we shall also compare Intel's new Xeon to two other chips which we reviewed earlier: the Socket 940 Opteron and Sun's UltraSparc T1.

Intel's Xeon 51xx 'Woodcrest'

During the past eighteen months, Intel has been presenting itself not so much as a chip maker, but rather as a solution provider for entire platforms. Consequently, along with the new server processor, the company introduced an accompanying chipset, under the codename 'Blackford', which is meant to end the bandwidth problems that the Xeons of the previous generation were struggling with. To get an idea of how much of the performance gain is due to the new processor and how much is a result of the new chipset, we also test the Xeon 50xx 'Dempsey', i.e. the last model that was based on the previous Netburst philosophy.

Post mortem: Netburst

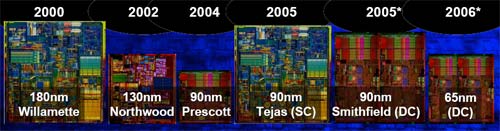

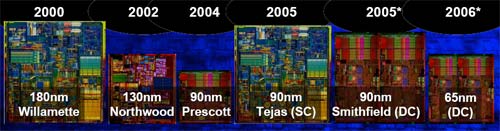

Intel's Netburst architecture spent pretty much its entire career as a subject of controversy. The first incarnation, the Pentium 4 'Willamette', was introduced on November 20, 2000, clocked at speeds up to 1.5GHz. This looked like a quantum leap compared to the 1.0GHz Pentium III 'Coppermine' and the 1.2GHz Athlon 'Thunderbird', but it did not take the world long to find out that clock speed does not tell the whole story. Not only was the chip's performance disappointing, it was also a bit of a financial burden for Intel as well as for its customers. The Willamette's core was twice as big as that of its predecessor and could only be used in combination with Rambus RDRAM memory, which was in short supply and expensive. An early socket change, which replaced the 423-pin socket with a 478-pin one, did not help to raise the CPU's popularity.

A little more than a year later, on January 7, 2002 to be precise, the second generation of Netburst was born, bearing the codename 'Northwood'. This period saw an ironing out of Willamette's rough spots, in part by virtue of the introduction of chipsets with support for DDR memory, HyperThreading, a larger L2 cache and broader acceptance of the SSE2 instruction set. Intel's strategy of focusing on high clock speeds even began to pay off: when the frequency gap with AMD grew to more than 800MHz, it drove the smaller chip maker into financial losses. But all was not rosy on Intel's side of the field, as energy consumption began to rise rapidly. Northwood started out at 2.0GHz with a TDP of 54.3 Watt, but by the time the CPU was clocking 3.06GHz this had reached 81.8 Watt, a staggering figure for those days.

Fortunately a solution was within reach, or at least this was believed. The transition of the production process from 130nm to 90nm was intended to reduce power consumption to such an extent that a sprint towards clock speeds of 5.0GHz would be possible. It was the Pentium 4 'Prescott' core that was meant to pull this off, in part by utilizing a long pipeline of 31 stages. However, as it turned out, it was at this point that the Netburst architecture collapsed: because of unexpected amounts of leakage from the small transistors, the intended power savings did not materialize. Even though this played a role in all processors (including those of Intel's competitors), the Pentium 4 turned out to be particularly susceptible to this side effect: higher clock speeds put more strain on the transistors and require higher voltages, both of which increase leakage. Moreover, the effect is self-reinforcing: as the chip heats up, even more leakage occurs.

All in all, the effects for Intel were substantial. When the Prescott was launched on February 2, 2004, the new core did not offer Intel's customers any gain in performance or reduction in power consumption. Instead, the resistance against even warmer chips turned out to be the death blow: in spite of reasonable success in making the design more energy efficient, the initial targets could simply not be met. Intel could not have chosen a worse moment for this misfortune, since AMD had only recently introduced the Athlon 64, with which it put a powerful answer to Northwood on the market, in terms of performance as well as power consumption. Left without the ability to take refuge in high clock speeds, the Pentium 4 had become easy prey, and Intel was forced to watch its lead fade away.

Intel's then-CEO on his knees to apologize for not achieving the 4GHz mark

With hindsight, it is easy to say that it was clear at that point that Intel had overplayed its hand, but it took the company a while to accept that Netburst was a dead end. On May 8, 2004 it was finally announced that work on two Pentium 4 successors (the projects codenamed Tejas and Nehalem) had been canceled, and that Intel would tackle things 'differently' in the future with 'dualcore' technology. Rumors were flying across the net in abundance, one of the more popular ones being that Intel would opt to develop the Pentium M's design further. This mobile processor had already been under development for a few years by an Intel team in Haifa, Israel, independently from the Netburst project. In spite of the fact that this chip had not been designed for desktops, let alone servers, it managed to perform impressively well. Besides, the Israeli Intel engineers had succeeded in avoiding the pitfall of ever-increasing power consumption.

So an efficient 64 bit, dualcore Pentium M, codenamed 'Merom', had been under development even before the Netburst-architecture derailed. According to unconfirmed reports, Merom's development started as early as 2002. Still, it was not until April 9 2005 that Intel officially announced Merom's double offspring: Conroe for desktops and Woodcrest for servers. Together, they were known as NGMA: Next Generation Micro-Architecture. Another year went by before the company announced the final name for the new architecture: Core. On the next pages, we shall look at the improvements that it has to offer.

The Core architecture (1)

Before we delve into the ins and outs of the Core, it is useful to consider what sort of processor it is. According to Intel it is a mix of the best of the Pentium 4 and the Pentium M, but it does not require a PhD to see that the overlap with the latter is much larger than with the former. Effectively, only features of Netburst (such as the 64 bit extension) have been used, but not a brick of that chip's design was left standing. Whether it can be classified as Pentium M offspring depends on the level of analysis. It was designed by the same team – the characteristics make it clear that the architects did not forget their experiences working on earlier projects. The Core is clearly based on a mobile philosophy, but closer examination betrays the presence of a few striking new features, which suffice to justify characterizing the design as new.

Wide Dynamic Execution

The Core is built to decode, execute and process up to four instructions per clock cycle. Other x86 chips such as the Pentium 4, Pentium M and the Athlon 64 have a maximum of three. Although it is hard in practice to find instructions within a single thread that can be executed independently, every improvement of the average is welcome. To maximize its potential, Core utilizes 'fusion': two related instructions are merged together by the hardware. This does not reduce the amount of work to be done, but it nevertheless leads to slightly better efficiency since less bookkeeping is needed.

Fusion takes place at two levels: that of the processor's internal instruction set (microfusion) and of the external set (macrofusion). The Pentium M can also do microfusion, but the technology has been improved for the Core to allow for more combinations. Intel says that microfusion takes ten percent off the processor's instruction load. Macrofusion operates directly on x86 instruction execution requests, and eliminates unnecessary complexity, for instance by merging a 'compare' operation and a 'jump' operation into a single 'compare and jump'. Note that macrofusion is not applied in 64 bit mode, which is possibly due to an assumption that modern compilers do not generate unnecessary instructions.
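The compare-and-jump case can be pictured as a simple pass over the instruction stream. The sketch below is a toy model in Python and not a description of Intel's decoder: the tuple layout, the function name `fuse_compare_and_jump` and the sets of fusible mnemonics are all assumptions made for illustration.

```python
# Toy model of macrofusion: a compare directly followed by a conditional jump
# is merged into one fused operation. The mnemonics and the tuple layout are
# illustrative assumptions, not Intel's actual decoder rules.

COMPARES = {"cmp", "test"}
CONDITIONAL_JUMPS = {"je", "jne", "jl", "jg"}


def fuse_compare_and_jump(instructions):
    """Return a new list in which each compare that is directly followed by
    a conditional jump is replaced by a single fused entry."""
    fused = []
    i = 0
    while i < len(instructions):
        current = instructions[i]
        following = instructions[i + 1] if i + 1 < len(instructions) else None
        if (
            following is not None
            and current[0] in COMPARES
            and following[0] in CONDITIONAL_JUMPS
        ):
            # One entry to track instead of two; operands are concatenated.
            name = current[0] + "+" + following[0]
            fused.append((name,) + current[1:] + following[1:])
            i += 2
        else:
            fused.append(current)
            i += 1
    return fused


program = [
    ("mov", "eax", "[counter]"),
    ("cmp", "eax", "10"),
    ("jne", "loop_start"),
    ("add", "ebx", "1"),
]
fused_program = fuse_compare_and_jump(program)
print(len(program), "instructions in,", len(fused_program), "operations out")
```

The same work is still carried out, but the pair occupies one slot rather than two, which is where the saving in bookkeeping comes from.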

Smart Memory Access

One of the most innovative features of Core's architecture is Memory Disambiguation. To guarantee the correct execution of a program, instructions must be processed in the right order. Or, at the very least, it should appear as if the instructions are handled in the correct order. For a number of years, processors have been around that are capable of 'covertly' crunching away instructions in a different order to achieve performance gains. The first x86 chips which applied this principle were AMD's K5 and Intel's Pentium Pro. For these so-called OoOE architectures (out-of-order execution) it is of paramount importance that the appearance of sequential processing is kept up. After all, it is undesirable, to say the least, to have a processor so intent on executing instructions that it ends up working with data that needs to be altered before it is operated on.

In practice, it is hardly ever necessary to execute all instructions in the exact order in which they are stated in the program source. One of the tricks up Intel's sleeve (which is also in AMD's K8L repertoire) is to execute read actions before it is their turn, making the data available quicker. Write actions are somewhat more troublesome: if there is a store instruction in the pipeline with an unknown target, the CPU cannot risk reading something in the meantime. Programmers and compilers avoid writing and rereading the same data for the sake of efficiency, but a processor's job is to get the correct results with all possible code, including suboptimal code. So far, this has been ample reason to block read actions until all preceding write actions have been handled.

The Core's Memory Disambiguator solves this issue by predicting the target of write actions and giving the go-ahead to any read instructions that are considered safe. The result is a shorter average waiting time for instructions, so the CPU can do more work in the same time span. The accuracy of the Disambiguator is allegedly 90%, so it still goes to work on the wrong data with some regularity. When this is noticed, and it always is before the results of code execution are finalized, the processing simply starts again. The system is somewhat comparable to branch prediction, which involves guessing at which branch of an if-construction program execution is to continue. The guesses are often good, resulting in increased efficiency, but sometimes extra work is necessary to undo the damage of a miss. Naturally, there should be a net positive result in this, so if the Disambiguator makes too many errors it gets disabled for the remainder of the thread.
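The speculate, check and replay cycle described above can be illustrated with a small Python model. It is only a sketch under loud assumptions: the class `ToyDisambiguator`, its misprediction threshold and the dictionary used as memory are invented for this example, and the model always speculates while enabled instead of imitating a real predictor.

```python
# Toy model of speculative load hoisting. A load runs ahead of an earlier
# store whose address is not yet known; if both turn out to touch the same
# address, the load is replayed. Names and thresholds are assumptions.


class ToyDisambiguator:
    def __init__(self, max_mispredictions=3):
        self.mispredictions = 0
        self.max_mispredictions = max_mispredictions

    @property
    def enabled(self):
        # After too many wrong guesses, speculation is switched off.
        return self.mispredictions < self.max_mispredictions

    def store_then_load(self, memory, store_address, store_value, load_address):
        """Run 'store, then load' and return (loaded_value, replayed)."""
        if not self.enabled:
            # Conservative path: wait for the store, then load.
            memory[store_address] = store_value
            return memory.get(load_address, 0), False

        # Speculative path: read before the store has been carried out.
        speculative_value = memory.get(load_address, 0)
        memory[store_address] = store_value
        if store_address == load_address:
            # Wrong guess: drop the stale value and load again.
            self.mispredictions += 1
            return memory[load_address], True
        return speculative_value, False


memory = {0x10: 1, 0x20: 2}
unit = ToyDisambiguator()

# Independent addresses: the early load was safe.
safe_result = unit.store_then_load(memory, 0x10, 7, load_address=0x20)

# Same address: the early load saw stale data and is replayed.
conflict_result = unit.store_then_load(memory, 0x10, 9, load_address=0x10)

print(safe_result, conflict_result, unit.mispredictions)
```

In both cases the program sees the value it would have seen with strictly ordered execution; the only difference is whether the early read had to be thrown away.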

The Core architecture (2)

Advanced Smart Cache

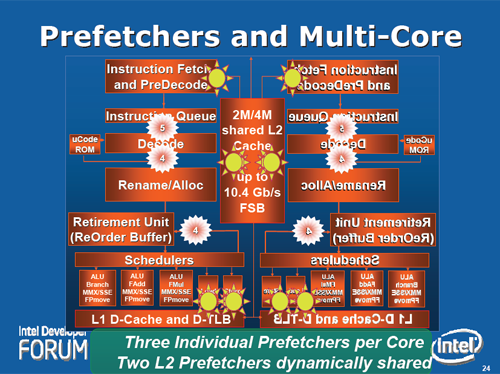

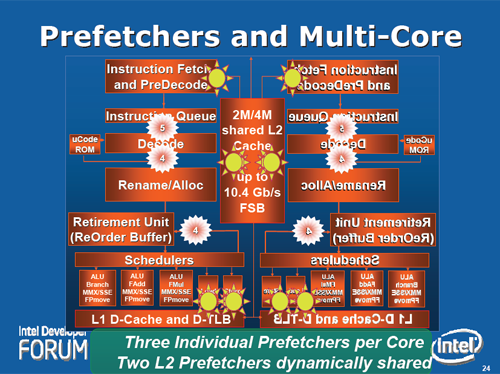

The Core has a shared L2 cache, which comes in 2MB or 4MB depending on the edition of the CPU. The processor cores can each access the data that the other one has requested, reducing the average waiting time in case the cores are both working on the same task. Cache capacity is dynamically distributed among the cores, so if needed, one thread can claim the entire cache. Cache sharing also reduces bus traffic, since internal communication can be handled via the L2. Incidentally, the cores' L1 caches are also connected, but Intel has so far declined to divulge the reason for that.

A dualcore Core processor has a total of eight prefetchers on board, working together with the large cache to lower latency. Each core has three prefetchers at the L1 level, two for data and one for instructions; the remaining two are shared in the L2. The point of using multiple prefetchers for the same cache is that together they can recognize various access patterns. In contrast to older designs, the Core's prefetchers check whether the data that they put into position actually ends up serving a purpose, in order to avoid putting unnecessary load on the bus and to reduce the amount of useful data that accidentally gets pushed out of the cache. Other than that, read instructions from the program itself get priority over the ones from the various prefetchers, which minimizes the risk of performance degradation due to overly enthusiastic prefetchers.

Although not having an integrated memory controller gives the Core a higher latency than the K8, the combination of cache and prefetchers is so good that it even fools various latency benchmarks that were specifically designed to circumvent primitive prefetchers. The only drawback to prefetchers is that they can get so active that they drive up power consumption. This is the reason Intel has provided an option to set a level of aggressiveness of the prefetchers, and has given the mobile Merom the mildest default settings while putting the server chip Woodcrest in the heaviest configuration.

Intelligent Power Capability

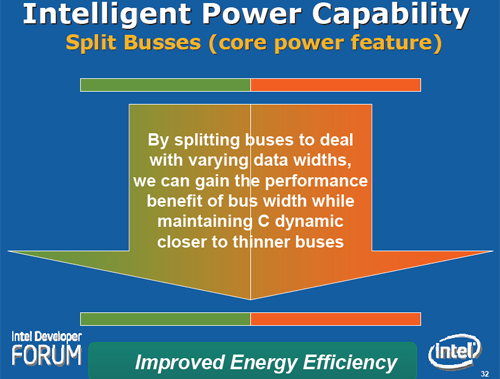

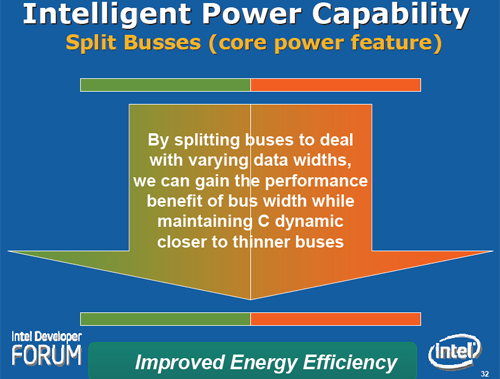

The efficiency of the Core in comparison to its predecessors is not only due to the 65nm production process, but is largely the result of clever tricks: just about every part of the core can be switched on and off. The surface area is partitioned into several main areas that are only active when they are in use. Certain components such as caches, busses and buffers can even be partially switched off. A disadvantage of switching off components is that it takes time to fire them back up, driving response time up and performance down. To solve this problem, Intel's engineers have designed a system that predicts when a particular part of the chip needs to be active, so that it is always ready in the nick of time.

Advanced Digital Media Boost

The Core is the first CPU capable of processing 128 bit SSE instructions in one go. Earlier chip designs had these partitioned into two 64 bit sections, which is an extra clock tick in any case, but also requires more bookkeeping. The wide data paths for multimedia cater for a maximum of four 64 bit flops per clock tick per core, which is twice as much as what Netburst and K8 can handle. Finally, eight new multimedia instructions have been added under the header SSE4, which are to aid specific applications in achieving performance gains. However, Intel has paid so little attention to this that we do not consider it likely that this is anything spectacular.

Blackford chipset

Beside the dead end of the Netburst philosophy, there was an additional problem for Intel's server processors: severe lack of bandwidth. The E7520 'Lindenhurst' chipset, the previous top-of-the-line model for two sockets, had just a single 800MHz bus, meaning that only 1.6GB/s would be available for each core in a dualcore machine. Two months before Woodcrest was announced, Intel improved this situation significantly with the introduction of the Blackford chipset. The double bus and four memory channels meant that even the first version could deliver 4.3GB/s per core. The gates were opened further for the Woodcrest, by upping the bus frequency from 1066MHz to 1333MHz. This means that today, every core has triple the amount of bandwidth at its disposal as compared to the beginning of this year.

| | Paxville | Dempsey | Woodcrest | Socket 940 | Socket F |
|---|---|---|---|---|---|
| Bus frequency | 800MHz | 1066MHz | 1333MHz | - | - |
| Number of busses | 1 | 2 | 2 | - | - |
| Bus bandwidth | 6.4GB/s | 17.1GB/s | 21.3GB/s | - | - |
| Memory | DDR2 | FBD | FBD | DDR | DDR2 |
| Number of channels | 2 | 4 | 4 | 4 | 4 |
| Frequency | 400MHz | 533MHz | 667MHz | 400MHz | 667MHz |
| Memory bandwidth | 6.4GB/s | 17.1GB/s | 21.3GB/s | 12.8GB/s | 21.3GB/s |
| Bandwidth per core | 1.6GB/s | 4.3GB/s | 5.3GB/s | 3.2GB/s | 5.3GB/s |
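The bandwidth figures in the table can be reproduced with a few lines of arithmetic. The Python sketch below assumes that every bus and memory channel is 64 bits (8 bytes) wide and that each two-socket system has four cores; the function name `peak_bandwidth_gb_s` and the dictionary layout are ours and only serve the illustration.

```python
# Theoretical peak bandwidth, assuming 64-bit (8-byte) wide busses and memory
# channels and four cores per two-socket system.
BYTES_PER_TRANSFER = 8
CORES_PER_SYSTEM = 4


def peak_bandwidth_gb_s(frequency_mhz, links):
    """Peak bandwidth in GB/s for `links` parallel busses or channels."""
    return frequency_mhz * 1e6 * BYTES_PER_TRANSFER * links / 1e9


platforms = {
    # name: (effective memory frequency in MHz, number of memory channels)
    "Paxville": (400, 2),
    "Dempsey": (533, 4),
    "Woodcrest": (667, 4),
    "Socket 940": (400, 4),
    "Socket F": (667, 4),
}

for name, (frequency_mhz, channels) in platforms.items():
    total = peak_bandwidth_gb_s(frequency_mhz, channels)
    per_core = total / CORES_PER_SYSTEM
    print(f"{name}: {total:.1f} GB/s total, {per_core:.1f} GB/s per core")
```

The bus rows follow the same formula: two 1333MHz busses give `peak_bandwidth_gb_s(1333, 2)`, which again comes to roughly 21.3GB/s.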

The table shows that in theory, Woodcrest and Socket F have the same amount of bandwidth. However, in practice, there are differences in effectively available bandwidth. Opterons have a decentralized architecture (NUMA) which means that each processor has two channels for itself and can only access the remaining memory via its neighbour. Internal communication proceeds via a HyperTransport link that delivers 4GB/s in each direction. When, in the worst case, a chip just needs the data 'over on the other side', the effective bandwidth drops to 2GB/s. For this reason, it is crucial that operating systems ensure that threads are given out on such a basis that they end up being executed close to their data, something which is not always easy to achieve.

There are also pitfalls in Intel's system. In every system with multiple sockets it is essential that the processors' caches remain synchronized. After all, a core must not perform computations using cache data that has been altered by another core. There are various ways to guard this so-called 'cache coherence', but all of them share the need for internal communication. AMD has this communication proceed via HyperTransport, so that there is no negative effect on local memory bandwidth, but Intel sends the communication data through the bus, which means that not all bus capacity is available for the memory.

The 5000P 'Blackford' chipset

Compared to Paxville and Dempsey, Woodcrest does remove a substantial part of the coherence traffic by having two cores share a single cache. This takes away the need to use the bus for internal communication within a single socket. In the coming 'Clovertown' quadcore, consisting of two dualcores, the two chips in the socket will probably have to communicate via the bus, but at least the chipset will be able to avoid making the processor in the other socket wait for this.

Blackford comes in two flavours, 5000P and 5000V. The latter is a somewhat cheaper version that has some limitations in maximum memory capacity and other features. It supports two channels with a maximum of 32GB, instead of four channels and 64GB. So-called 'memory mirroring', which stores data twice so as to notice mistakes, is supported by the 5000P but not by the 5000V. A similar feature called 'memory RAID' is present in both; this also duplicates data, for the purpose of being able to restore it in case a chip or module fails. Finally, there is a third chipset, the 5000X 'Greencreek'. Its specifications largely overlap those of the 5000P, but it has a PCI Express x16 slot for connecting a decent video card and a memory controller that is optimized for workstations. This offers a somewhat higher bandwidth, but that comes at the cost of slightly higher latency.

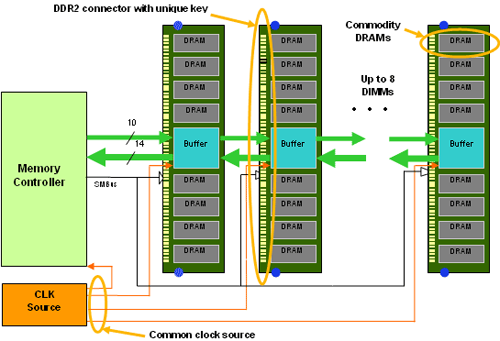

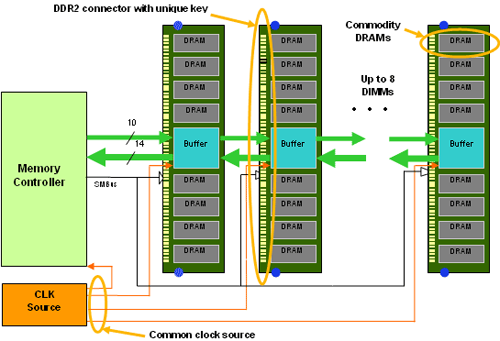

DDR2 vs. FB-DIMM

Blackford and Greencreek are the first chipsets to use a new type of memory module that was designed by a large group of companies, led by Intel and IBM. Contrary to an ordinary DIMM, a so-called FB-DIMM does not use a parallel bus to send data, but a serial point-to-point connection. One of the main reasons for this is that it has turned out to be difficult to scale a parallel bus to high speeds and large numbers of modules. DDR2, for instance, allows a maximum of four modules to a channel at 400MHz and 533MHz, but no more than two per channel at 667MHz and 800MHz. It has been predicted that next year, with DDR3, things will get to a point that only one module can be used per channel. This is not a desirable situation from the server perspective, because it limits capacity and more expensive modules are required to achieve a certain number of gigabytes. FB-DIMM supports a comfortable eight modules per channel, thereby eliminating this problem.

A second advantage of this method is that the controller is no longer required to talk directly to the memory chips, but only needs to converse with the buffer chip (AMB - Advanced Memory Buffer). This means that the memory controller no longer needs to know which chips are used on the other side of the buffer. At the moment, all available FBD-memory uses DDR2-chips, which can be painlessly replaced by a different kind, such as DDR3 or something more exotic like XDR. Such a switch will no longer require changing the motherboard or sockets.

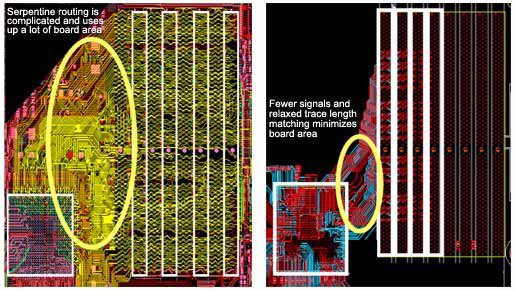

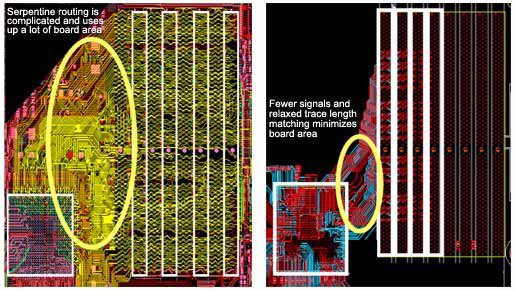

A third motivation for turning towards FB-DIMM is that it only uses 69 traces per channel on the motherboard. An ordinary DDR2 channel requires 240 traces, which need to be (nearly) the same length – a frustrating situation for motherboard designers. Opening a computer and looking at the part between CPU and memory banks reveals that certain traces wriggle and wind their way from one side to the other, which is meant to slow the signal down. FB-DIMM has the chipset compensate for differences in length, and the combination with the smaller number of traces means that in practice, there is room for two or three times the number of channels of equal or smaller complexity.

Left: one channel of DDR2, excluding power | Right: two channels of FB-DIMM, including power

The final issue that has been tackled is reliability: ECC fault correction is no longer just applied to data, but also to addresses and instructions. Moreover, a transaction can be retried in case of an error without causing the processor or the operating system to panic. This comes with support for hotswapping and for switching off data paths that prove unreliable. Although this decreases bandwidth, it keeps the system up and running.

Not everything about FB-DIMM is positive: a clear downside is the increased latency. Besides introducing a buffer as an extra step between processor and memory, the controller only has a direct connection with the first module on the channel. The remaining modules are only accessible indirectly, which involves a three to five nanosecond delay (two or three clock cycles). By the time the eighth module is accessed, something of an eternity has elapsed from the processor's perspective. The Opteron's NUMA architecture suffers from a similar drawback when data needs to be accessed from a module that is attached to the other socket. That adds thirty nanoseconds (about twenty cycles for DDR2-667) to the total access time.
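To get a feel for how this adds up along a channel, the Python sketch below assumes that the three to five nanoseconds apply to every hop past the first module. That per-hop reading is our interpretation of the figures above rather than a measured result, and the names `HOP_DELAY_NS` and `extra_latency_ns` are hypothetical.

```python
# Extra latency for reaching the n-th FB-DIMM on a channel, ASSUMING every
# hop past the first module adds the same 3 to 5 ns quoted in the text.
HOP_DELAY_NS = (3.0, 5.0)
NUMA_REMOTE_PENALTY_NS = 30.0  # Opteron penalty for memory on the other socket


def extra_latency_ns(module_position):
    """Return (best, worst) added latency in ns for a 1-based position."""
    hops = module_position - 1
    return hops * HOP_DELAY_NS[0], hops * HOP_DELAY_NS[1]


for position in (1, 2, 4, 8):
    best, worst = extra_latency_ns(position)
    print(f"module {position}: +{best:.0f} to +{worst:.0f} ns")

print(f"remote NUMA access for comparison: +{NUMA_REMOTE_PENALTY_NS:.0f} ns")
```

Under this assumption the eighth module lands in the same range as a remote access on the Opteron, which is why the two drawbacks are comparable.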

Most manufacturers are working breadth-first rather than depth-first in applying the Xeon DP. The most popular configuration for Blackford servers is four channels with two or three modules each, so the extreme case of eight per channel has not yet appeared in practice. In case of heavy loads, the disadvantageous effect of higher latency per transaction is compensated by the fact that more actions can be executed simultaneously. For instance, reading and writing can be done simultaneously, and at each clock tick, instructions can be sent to three different modules per channel. This can bring average latency down as compared to DDR2 in case the system is under heavy pressure, but this is not expected to hold for all applications.

Another drawback of the buffer is that it can get fairly occupied: with an effective transmission speed of 3.2GHz (PC2-4200F) or 4.0GHz (PC2-5300F) in two different directions, few people will be surprised that this raises the power consumption of the module. We have found that every additional (533MHz) module costs an extra 7.6 Watt of power, regardless of the server load. On the other hand, the 1GB DDR2-667 modules of our Socket F Opteron used 1.9 Watt when idle and 2.4 Watt when loaded. This allows one to conclude that every FB-DIMM adds a good 5 Watts to the total consumption of the server, which makes for 40 Watts when using a total of eight modules.
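The 'good 5 Watts' is simply the difference between the two measurements. The Python sketch below redoes the subtraction with the figures quoted above; the variable names are ours, and like the text it compares a 533MHz FB-DIMM with a DDR2-667 module.

```python
# Measured module power from the text, in Watts.
FBDIMM_WATTS = 7.6        # per FB-DIMM (533MHz), independent of load
DDR2_WATTS_IDLE = 1.9     # per 1GB DDR2-667 module, idle
DDR2_WATTS_LOADED = 2.4   # per 1GB DDR2-667 module, under load
MODULES = 8

extra_loaded = FBDIMM_WATTS - DDR2_WATTS_LOADED  # smallest difference
extra_idle = FBDIMM_WATTS - DDR2_WATTS_IDLE      # largest difference

print(f"extra per module: {extra_loaded:.1f} to {extra_idle:.1f} W")
print(
    f"extra for {MODULES} modules: "
    f"{extra_loaded * MODULES:.1f} to {extra_idle * MODULES:.1f} W"
)
```

The difference is largest when the system is idle, because the FB-DIMM draws the same power regardless of load while the DDR2 module does not.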

It is expected that as more experience is gained with the design of buffers, power consumption will come down. However, frequency will have to go up at the same time to support faster memory, making for an everlasting battle. At least the memory controller does not seem to use so much power now that buffer chips are starting to take on a substantial range of functions. The maximum energy consumption of the 5000P chipset with four channels is specified at 30 Watts, but every channel adds only 1.75 Watts, making it appear as if the northbridge's power consumption resides largely in other functions.

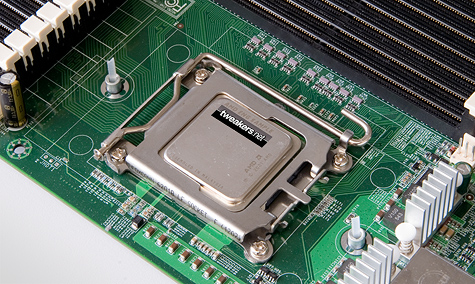

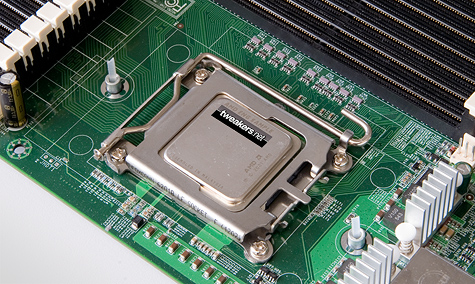

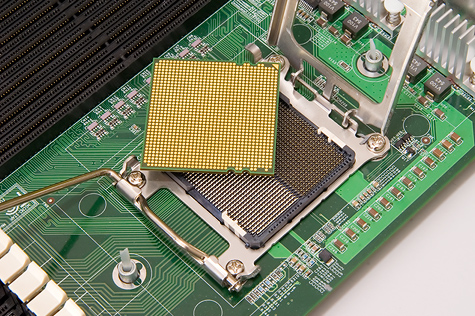

Test platform: Woodcrest and Dempsey

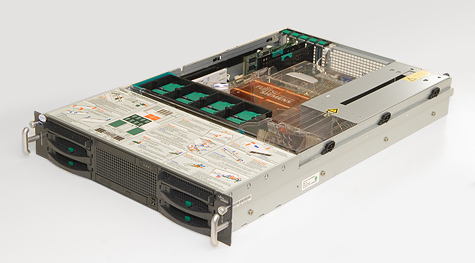

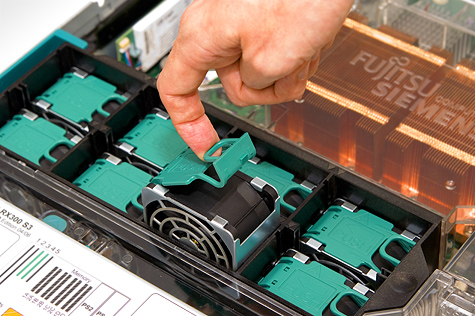

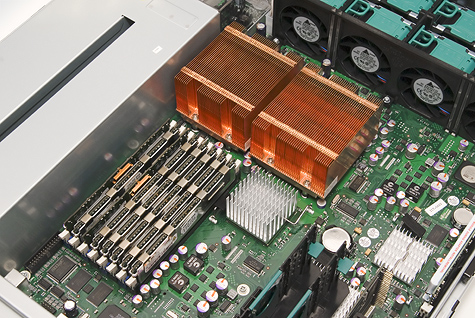

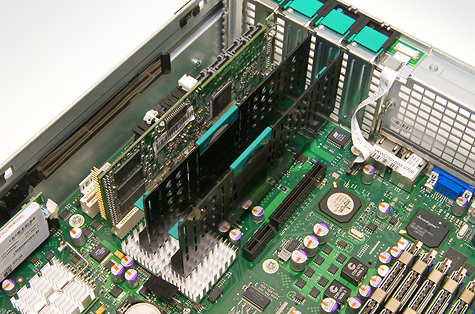

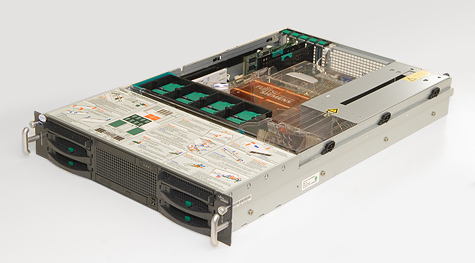

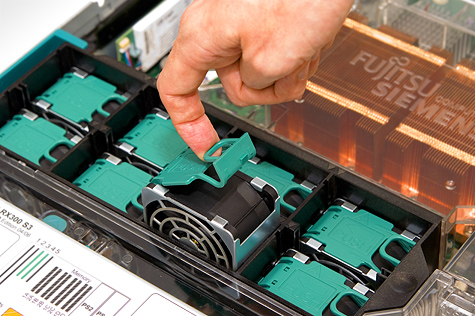

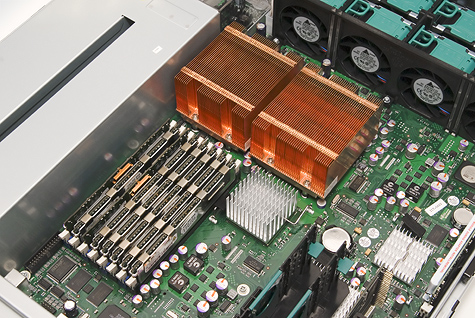

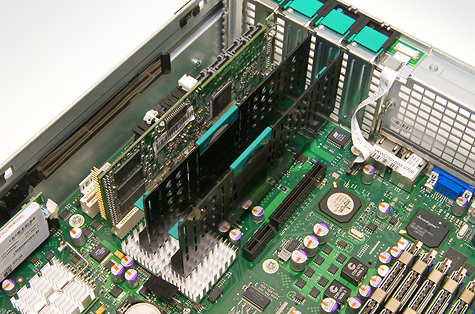

Fujitsu Siemens was kind enough to lend us a Primergy RX300 S3 in order to give the Woodcrest and the Dempsey a good grilling. This machine is a 19"/2U rack mounted server which, according to the company's marketing division, is suitable for 'demanding tasks in the areas of ERP and e-commerce'. The motherboard is based on the 5000P chipset and has two LGA771 sockets for Dempsey or Woodcrest, eight memory slots, one PCI Express x8 slot, two PCI Express x4 slots, and two 133MHz PCI-X slots. Other standard features include an eight port SAS controller that supports RAID levels 0 and 1. The option is available to extend this with a 256MB cache and support for RAID levels 5, 10, and 50. Other basic features are an IDE connector for a dvd/cd drive and dual gigabit ethernet. The chassis has room for two 600 Watt power supplies, six hard disks, eight fans, an optical drive and a floppy drive. Among the supported operating systems we find Windows Server, VMWare ESX Server, Suse Enterprise Server and RedHat Enterprise Linux. More extensive specs can be found here.

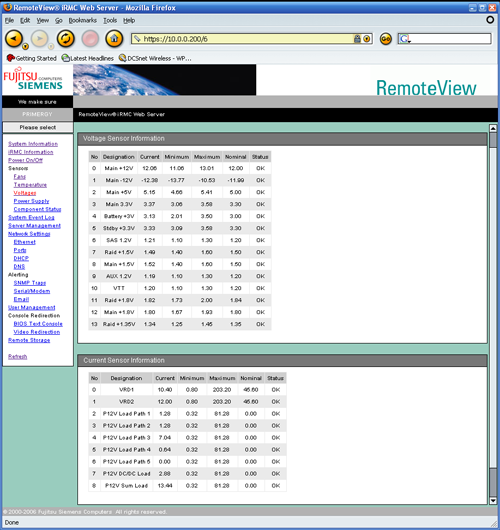

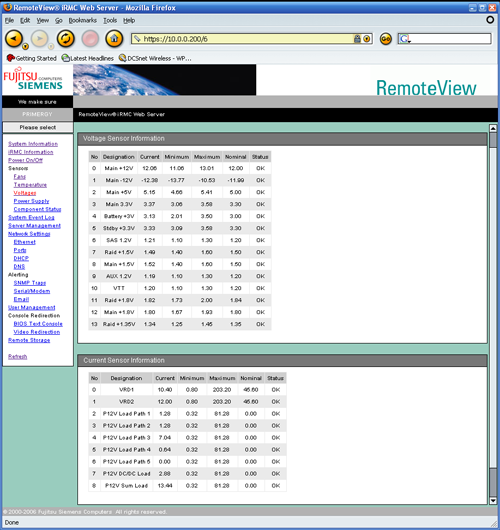

Like the Sun machines that we looked at earlier, the Fujitsu-Siemens can be accessed remotely for administration tasks, using a built-in web application called Remote View. This app provides extensive information about the hardware and can send various types of warnings in case it appears that something might go wrong.

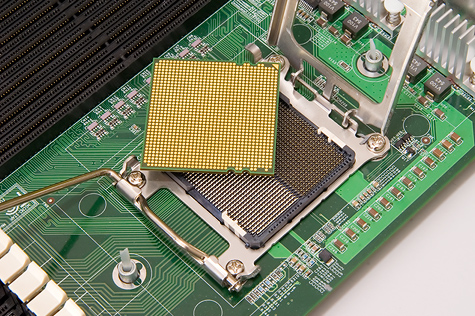

Our machine came with four Xeon processors: two 5080s and two 5150s. Although these model numbers are close together, the difference in specifications is like night and day: the former is the top model from the old series and the latter is the second fastest in the new series. Incidentally, the Woodcrests turned out to be samples of the B1 stepping instead of the commercially available B2 revision, but this is not expected to make for a noticeable difference.

| Xeon | 5080 | 5150 |
|---|---|---|
| Codename | Dempsey | Woodcrest |
| Architecture | Netburst | Core |
| Transistors | 374 million | 291 million |
| Die-size | 161mm² | 143mm² |
| Stepping | C1 | B1 |
| Clock speed | 3.73GHz | 2.66GHz |
| Bus | 1066MHz | 1333MHz |
| L2-cache | 2x2MB | 4MB |
| TDP | 130W | 65W |
| Price | $851 | $690 |

For both processors, the same memory was used: fully buffered DDR2-533 with a CAS latency of four ticks (PC2-4200F CL4). We used a total of eight modules – six times 1GB and twice 512MB – for a total capacity of 7GB.

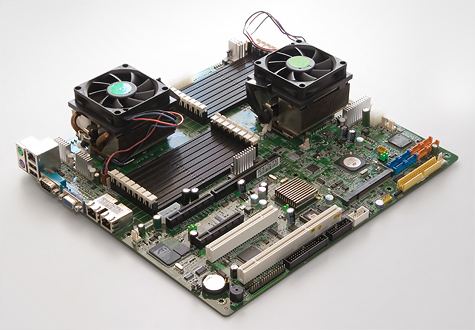

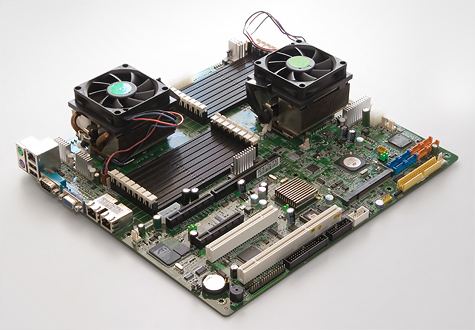

Test platform: Socket F and the rest

The results of the Opteron with DDR2 that have been used in this review are the same as those that were used in an earlier article. They were obtained using an MSI K9SD Master A8R and two dualcore Socket F Opterons clocked at 2.4GHz. The board uses a Serverworks chipset and has a PCI Express x8 slot, a 133MHz PCI-X slot and a standard PCI slot. A fourth slot can be selected by the customer: depending on the riser card used, the fourth slot is either PCI Express x8 or an HTX connection (HyperTransport).

On board we find two gigabit ethernet ports and eight SATA ports (four from the Serverworks chipset with support for RAID 0 and 1, and four from an Adaptec AIC-8130 supporting RAID 0, 1, and 10). An integrated ATi Radeon Mobility handles the video output. However, it is especially the large number of memory slots, totalling sixteen, that makes this board stand out. Unfortunately, stability problems with our preproduction hardware made it impossible to put more than four modules to work. We ended up testing with only 4GB of RAM, less than the other systems had at their disposal. The few results that we managed to obtain with the Socket F system at 8GB allow for the conclusion that the performance loss due to the lower memory capacity was about two percent, which needs to be taken into consideration when reading the diagrams.

Besides the Socket F board we shall encounter the results of two other familiar faces in this article: the Sun X4200 and T2000 from our UltraSparc T1 vs. Opteron review. For completeness, we list all specifications below.

| Brand | Intel | Intel | AMD | AMD | Sun |
|---|---|---|---|---|---|
| Processor | Xeon | Xeon | Opteron | Opteron | UltraSparc |
| Model | 5080 | 5150 | 2216 | 280 | T1 |
| Code name | Dempsey | Woodcrest | Santa Rosa | Italy | Niagara |
| Architecture | Netburst | Core | K8 | K8 | Sparc |
| Clock speed | 3.73GHz | 2.66GHz | 2.4GHz | 2.4GHz | 1.0GHz |
| Socket type | 771 (J) | 771 (J) | 1207 (F) | 940 | 1933 |
| Bus | 1066MHz | 1333MHz | - | - | - |
| L2-cache | 2x2MB | 4MB | 2x1MB | 2x1MB | 3MB |
| TDP | 130W | 65W | 95W | 95W | 79W |
| Price | $851 | $690 | $698 | $851 | - |
| Server brand | Fujitsu | Fujitsu | MSI | Sun | Sun |
| Server type | RX300 S3 | RX300 S3 | K9SD Master | Fire X4200 | Fire T2000 |
| Height | 2U | 2U | - | 2U | 2U |
| Number of sockets | 2 | 2 | 2 | 2 | 1 |
| Number of cores | 4 | 4 | 4 | 4 | 8 |
| Memory type | FBD | FBD | DDR2 | DDR | DDR2 |
| Channels | 4 | 4 | 4 | 4 | 4 |
| Frequency | 533MHz | 533MHz | 667MHz | 400MHz | 533MHz |
| CAS (cycles) | 4 | 4 | 5 | 3 | 4 |
| CAS (nanoseconds) | 7.5 | 7.5 | 7.5 | 7.5 | 7.5 |
| Capacity | 7GB | 7GB | 4GB | 8GB | 16GB |
| Operating system | Linux 2.6 | Linux 2.6 | Linux 2.6 | Linux 2.6 | Solaris 10 |
| Storage controller | Areca | Areca | Areca | Areca | LSI |
| Type | ARC-1120 | ARC-1120 | ARC-1120 | ARC-1120 | SAS1064 |
| Cache | 128MB | 128MB | 128MB | 128MB | - |
| Interface | PCI-X | PCI-X | PCI-X | PCI-X | PCI-X |
| Disks | Raptor 73GB | Raptor 73GB | Raptor 73GB | Raptor 73GB | SAS 73GB |
| Number of disks | 2 | 2 | 2 | 2 | 2 |
| RAID | JBOD | JBOD | JBOD | JBOD | JBOD |

Benchmark description

The goal of our benchmark is to simulate the load that Tweakers.net (excluding the forum) puts on its database under normal circumstances. The production version (the one that just helped the author produce the current page of this review) runs a MySQL 4.0 installation with close to two hundred tables, which vary substantially in size (ranging from a few kilobytes to several gigabytes) and activity. The database runs on a dedicated system and gets its instructions from a load-balanced cluster of web servers. This separation of data and web apps is based on a classic 'two-tier' pattern.

The web servers run PHP 4.4 and use the standard MySQL libraries. Depending on which page is requested, the web servers fire queries of widely varying complexity at the database. Some queries are directed at a single table while others access four or more. The WHERE clauses tend to be quite short: in most cases they only filter on a particular key (such as the number of a news item or product). In a few cases additional operations like sorting or page numbering are performed.

The benchmark purposely ignores regular maintenance, in part to reduce the duration of the test but also to minimise the number of write operations (UPDATE, DELETE, and INSERT), which in turn minimises the influence of the storage system on performance. The active part of the database encompasses only a few gigabytes, so systems with 4GB to 8GB can hold the entire working set in memory, and even with 2GB there is not much disk activity. This puts the emphasis on the processors and memory. A further difference with the production environment is that in the tests, whole series of requests are handled in a single batch, while normally every page requires a new connection to the database. Cutting out that step allows us to load the database more heavily and in a more controlled fashion.

The test database is a backup of the production database that is loaded into MySQL 4.1.20 and 5.0.20a. It is also imported into a CVS version of PostgreSQL 8.2, which, insofar as we have been able to determine, is fully stable. For PostgreSQL, a couple of indexes were replaced to allow better performance, and a few data structures were altered. Apart from the server being grilled, the lab setup consists of three machines: two Appro web servers (dual Xeon 2.4GHz with 1GB of memory) to generate requests, and a third machine to process the results. Gigabit ethernet ties the machines together.

In our test, a visitor is represented by a series of pageviews that are handled by Apache 2.2 and PHP 4.4. On average, a series of requests totals 115, with variations due to varying probabilities of responding to a news item or entering a price in the Pricewatch section of this site. To be precise, every 'visitor' requests the following pages, which are chosen at random unless stated otherwise.

| Number | Description |
|---|---|
| 34 | Standard frontpage |
| 1 | Dynamic frontpage (subscriber's function) |
| 18 | Most recent news items |
| 7 | Random news items |
| 2 | Reviews |
| 13 | Overview of Pricewatch categories |
| 14 | Pricewatch price tables |
| 2 | Adverts (V&A) |
| 2 | Product surveys |
| 6 | Software updates |
| 14 | XML feeds |
| 5% probability | Enter a new price |
| 2.5% probability | Post reaction to the most recent news item |

Although this may not be entirely natural, we believe it to be an acceptable approximation of reality, with enough variation and load to make the database sweat. The requests are put in a random order so that an unpredictable pattern is fired at the database. To prevent the web servers from becoming a bottleneck, as little as possible is done with the responses. The final result is the number of page views executed in a time span of exactly ten minutes (measured with ApacheBench). Although not every page is the same, even the slowest runs request over ten thousand of them, so we feel quite safe about the statistical comparability of the results.
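The visitor profile from the table above can be sketched in a few lines of Python. This is only an illustration and not the script we actually used: the page labels, the `build_session` helper, and the choice to apply each probability once per visitor are assumptions made for the example. Only the counts and probabilities come from the table.

```python
import random

# Fixed page counts per simulated visitor, taken from the table above.
PAGE_COUNTS = {
    "standard_frontpage": 34,
    "dynamic_frontpage": 1,
    "recent_news": 18,
    "random_news": 7,
    "review": 2,
    "pricewatch_categories": 13,
    "pricewatch_price_table": 14,
    "advert": 2,
    "product_survey": 2,
    "software_update": 6,
    "xml_feed": 14,
}

# Optional write actions and their probabilities, as listed in the table.
# Assumption: each probability is evaluated once per visitor.
OPTIONAL_ACTIONS = {
    "enter_price": 0.05,
    "post_reaction": 0.025,
}


def build_session(rng: random.Random) -> list:
    """Return one visitor's requests in random order (hypothetical helper)."""
    requests = [page for page, count in PAGE_COUNTS.items() for _ in range(count)]
    for action, probability in OPTIONAL_ACTIONS.items():
        if rng.random() < probability:
            requests.append(action)
    # Shuffle so that the database sees an unpredictable request pattern.
    rng.shuffle(requests)
    return requests


session = build_session(random.Random(42))
print(len(session), session[:5])
```

Passing in a seeded `random.Random` keeps a run reproducible, which is convenient when the same request pattern has to be replayed against several servers.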

A complete session involves runs with varying numbers of simultaneous visitors, ranging from one to a hundred. Each web server simulates half the number of visitors. The database machine fires the starting shot, so that it can register the start and end times itself. We are considering doing this with a separate control system in the future, but at the moment it does not appear to have a significant influence on the result if we leave it to the database machine. Each session is kicked off by a warming-up round with 25 simultaneous visitors, to get memory and cache filled up. This ensures that the first run is not at a disadvantage. After every run, the database is cleaned up by removing the newly entered prices and reactions (a 'vacuum' command in the case of PostgreSQL). After that, the machine gets a thirty second break to prepare for the next attack.
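The course of a session can be summarised as a small control loop. The sketch below is a simplified model under stated assumptions: `run_session`, `run_benchmark`, and `cleanup` are hypothetical names, the concurrency levels are left to the caller because the exact steps are not listed here, and cleaning up after the warming-up round is our assumption for the example.

```python
import time
from typing import Callable, Dict, Iterable


def run_session(
    concurrency_levels: Iterable[int],
    run_benchmark: Callable[[int], int],
    cleanup: Callable[[], None],
    warmup_visitors: int = 25,
    pause_seconds: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[int, int]:
    """Run one session and return the pageviews measured per concurrency level."""
    # Warming-up round: fills memory and caches; its result is discarded.
    run_benchmark(warmup_visitors)
    cleanup()
    sleep(pause_seconds)

    results = {}
    for visitors in concurrency_levels:
        results[visitors] = run_benchmark(visitors)
        cleanup()             # remove the newly entered prices and reactions
        sleep(pause_seconds)  # thirty second break before the next run
    return results
```

The `sleep` parameter exists only so that the pause can be replaced by a no-op when trying out the loop without waiting.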

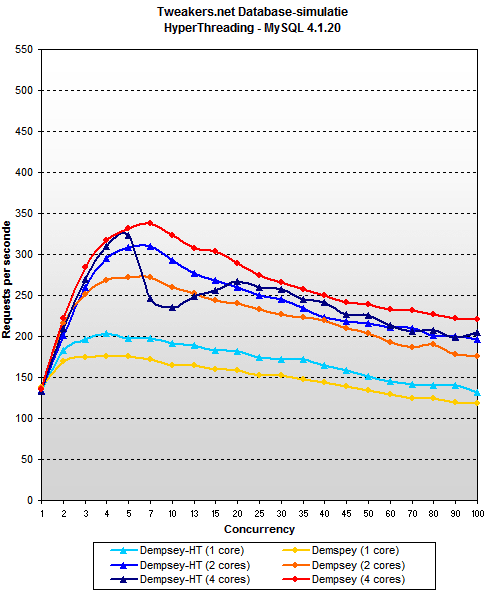

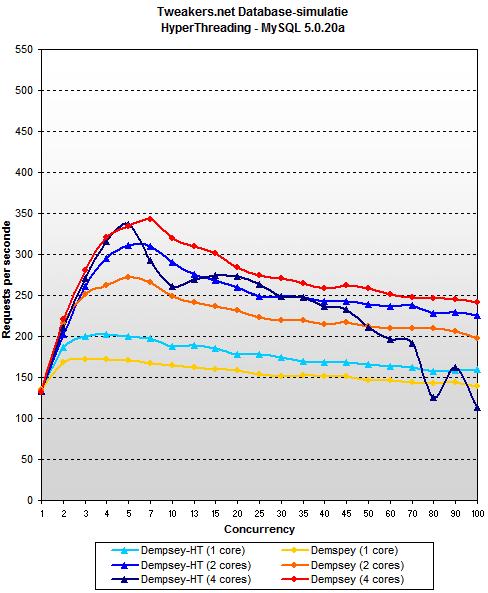

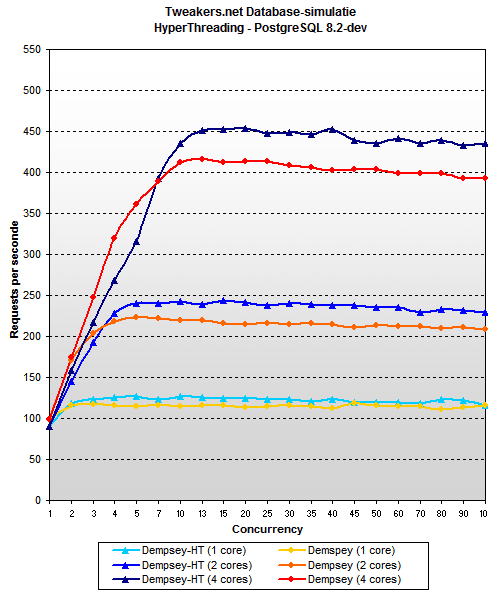

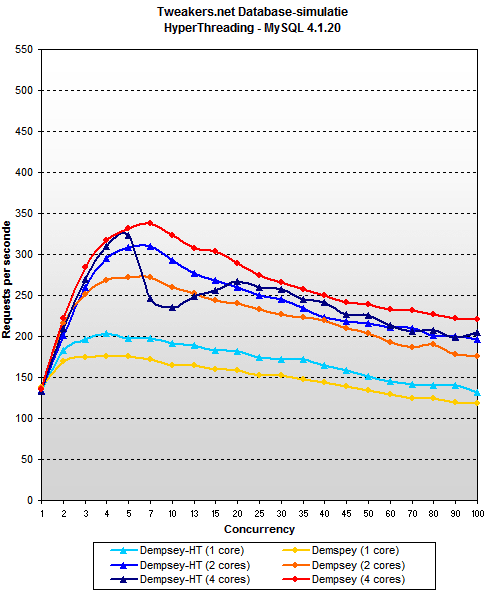

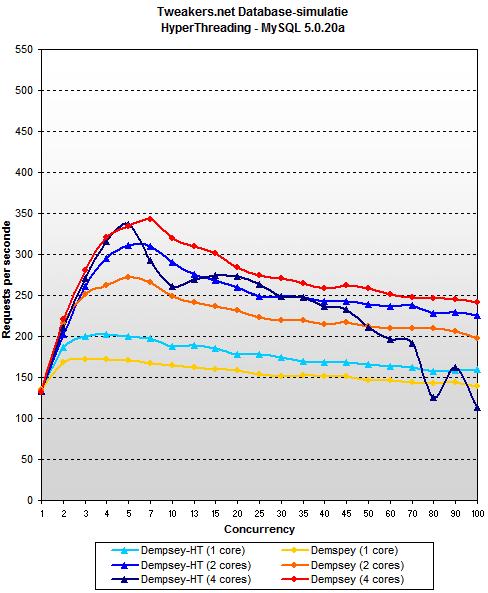

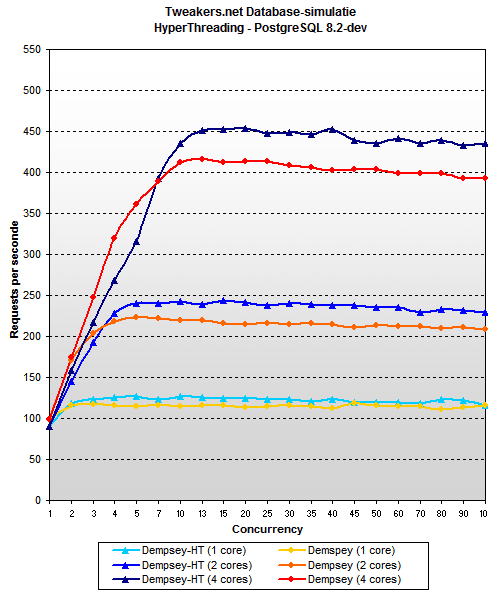

Influence of HyperThreading

HyperThreading has always been a somewhat controversial feature, in part because the theoretical advantage can only be achieved by avoiding a few practical disadvantages, which is not easy for every application. In our first article we were forced to conclude that MySQL and virtual cores are not a good combination, but the technical reason for this was unclear. Since Blackford is a drastic revision of the platform and Dempsey, although still based on Netburst, is quite a bit faster, we have again asked whether HyperThreading can be useful in our situation.

This time, the results are not consistent. For MySQL the feature appears to lead to performance gains: as long as one or two cores are used the performance is on average 10% better, which is quite significant. However, with four cores the story is different: version 4.1.20 loses about 7% of its performance and 5.0.20a runs a good 20% slower with HyperThreading switched on.

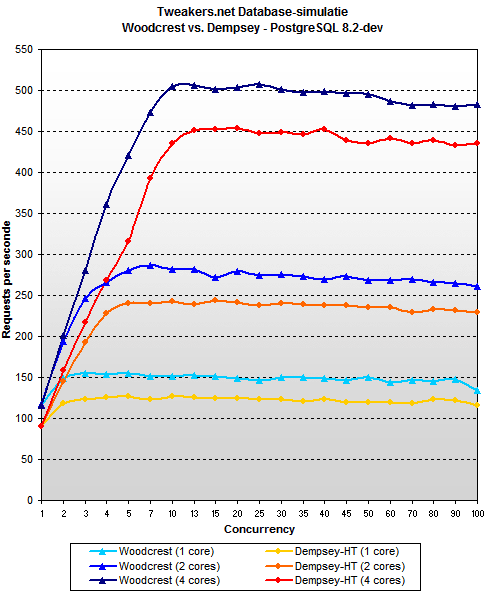

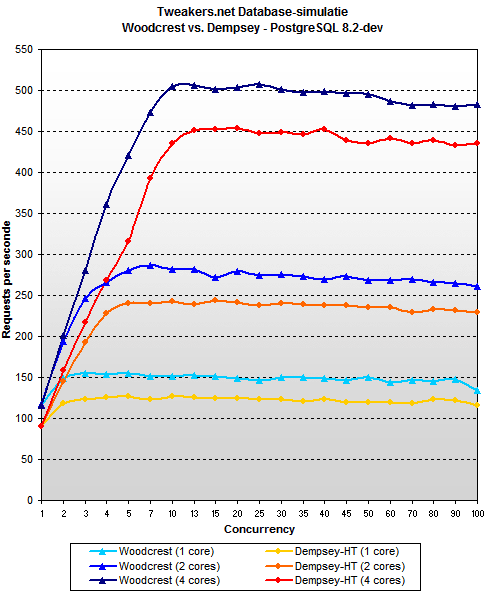

PostgreSQL is yet another story. In our earlier article on Sun's UltraSparc T1, it had already proved to scale better than MySQL, but now it also turns out that PostgreSQL has no problems with HyperThreading. Averaged over 25 or more simultaneous visitors, there is an 8% performance increase when HyperThreading is used.

Normally, we like to keep as many settings as possible constant during testing, but the wildly varying results with and without HyperThreading were too great to ignore. For this reason, we chose to show the MySQL benchmarks without HyperThreading and the PostgreSQL benchmarks with HyperThreading. We assume that a competent sysadmin will be able to test whether or not it pays off to have it switched on for their applications, so we have similarly chosen the best setting for ours.

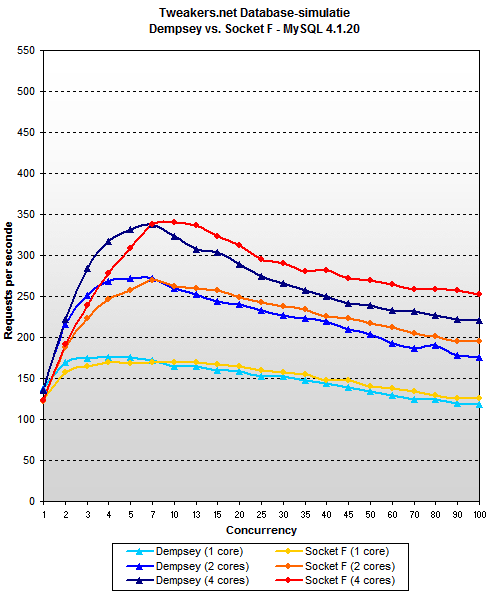

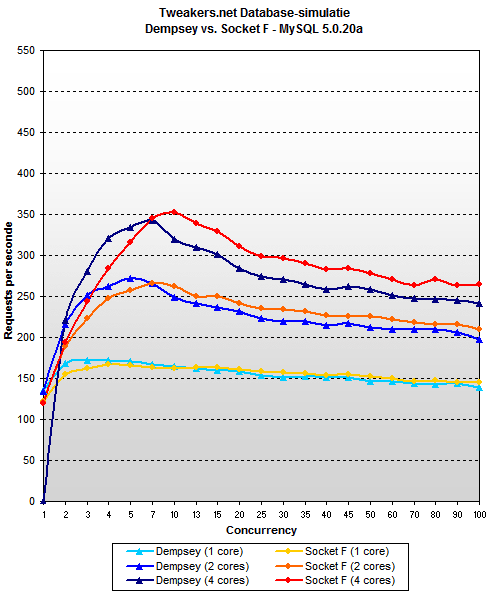

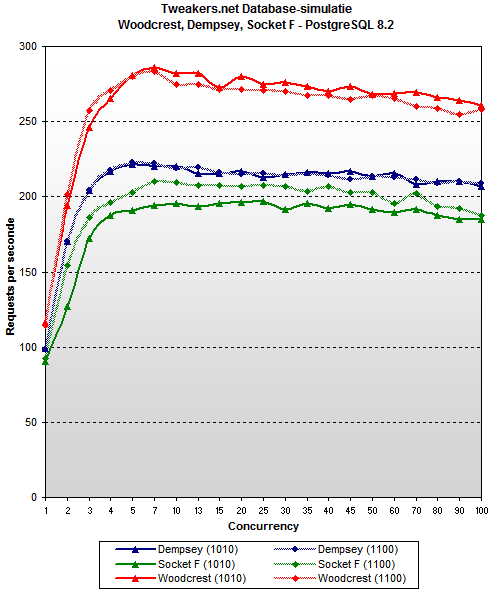

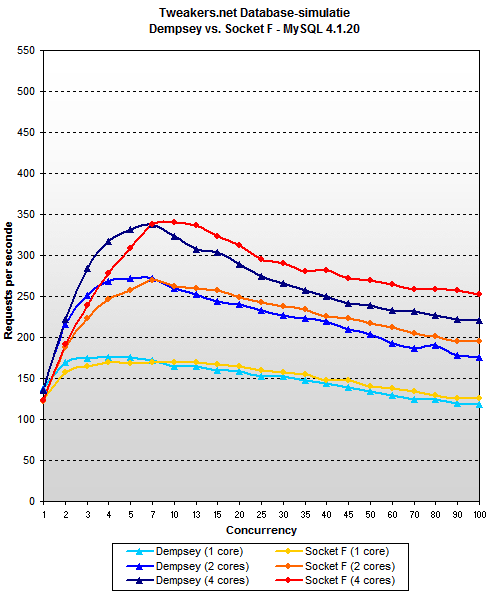

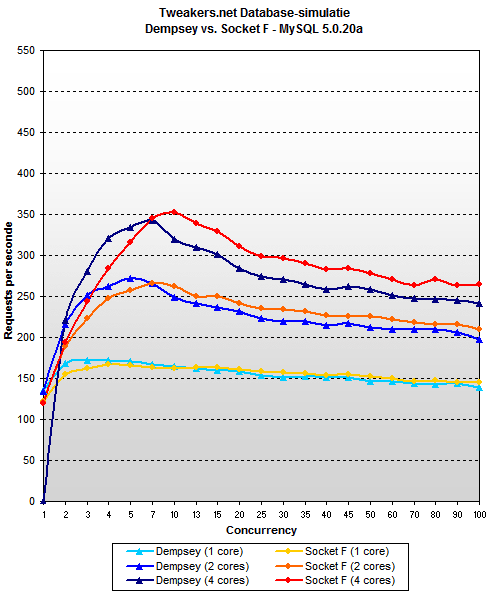

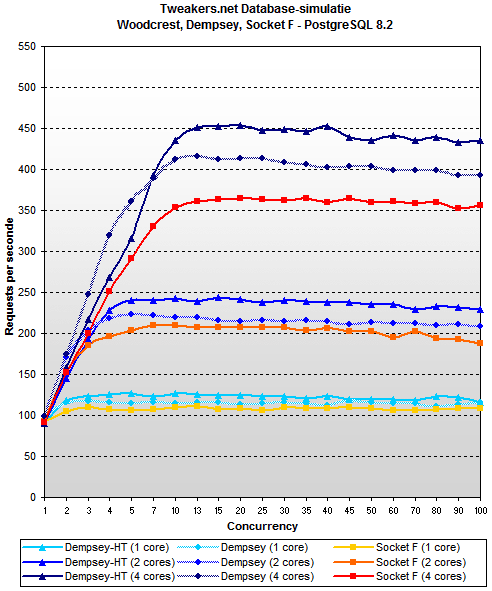

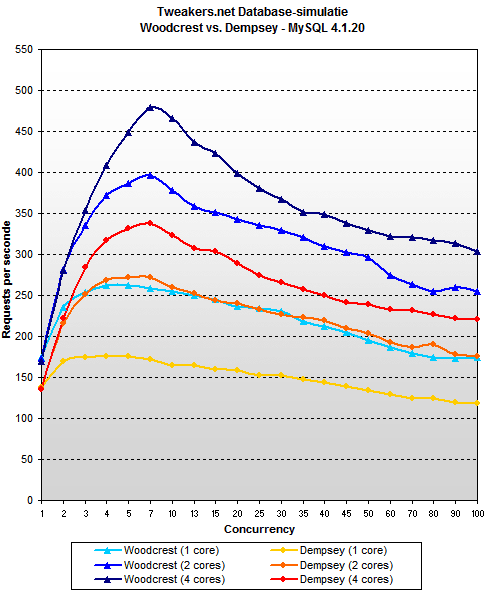

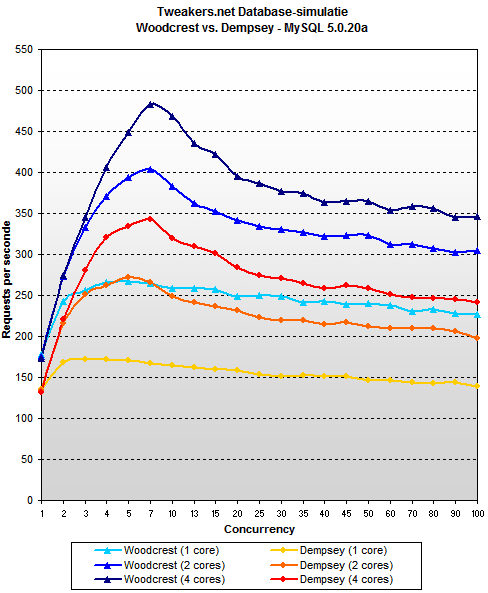

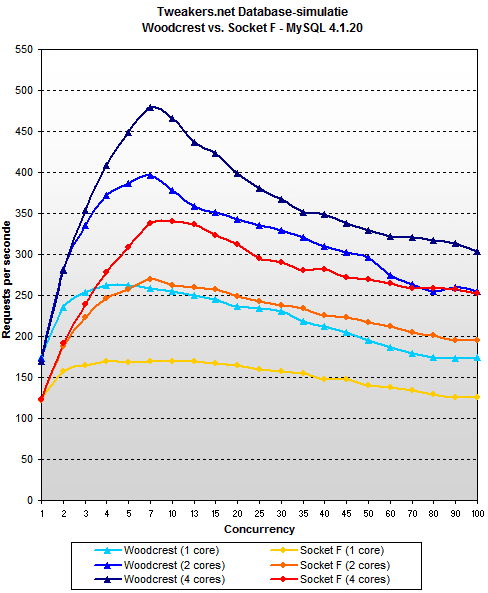

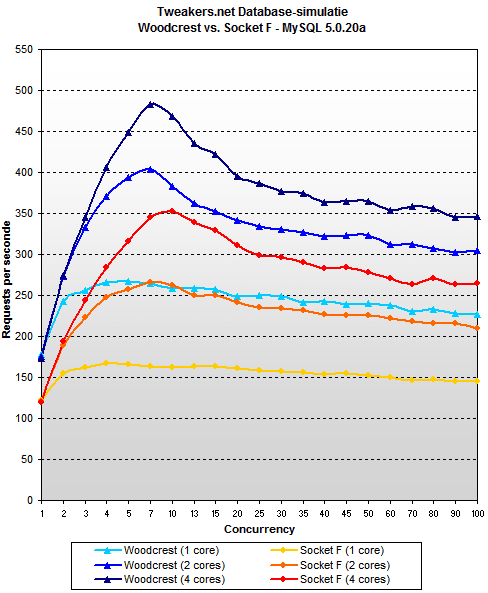

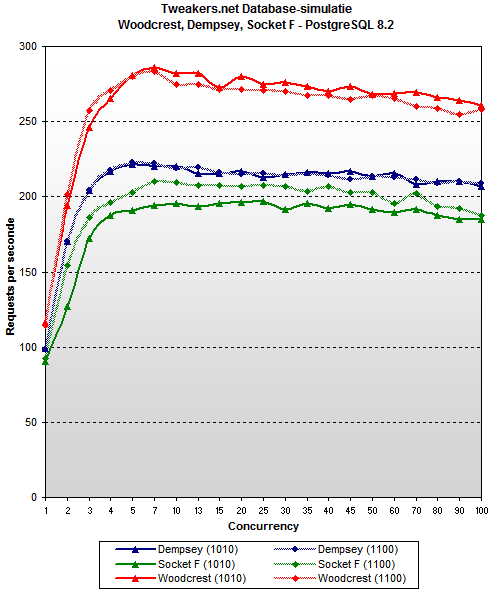

Opteron vs. Dempsey

The first thing we want to examine is how the Xeon Dempsey performs compared to the Opteron. After all, this enables us to see how the old Netburst architecture, armed with a good chipset, fares against the competition. As it turns out, not very well: under heavy load, the 2.4GHz Opteron is on average 12% faster than the 3.73GHz Dempsey in MySQL 4.1.20. In MySQL 5.0.20a, the difference is somewhat smaller but 9% is still a clear win for AMD. Still, the advantage of the Opteron is not as great as it was at the beginning of this year, when the old Xeon 'Paxville', in conjunction with the Lindenhurst chipset, was the Opteron's sole competitor. The almost tripled bandwidth that comes with Blackford apparently makes up quite a bit of ground even without Woodcrest. It may not yet be a victory for Intel but at least there is less reason for red faces in Santa Clara.

In PostgreSQL Dempsey achieves a substantial victory against the Opteron. The reason, besides the 1.3GHz faster clock speed, appears to be good use of HyperThreading: with a core each, the servers put down similar performances but moving to two and four cores sees the Xeon gain more ground than the Opteron. In the end the Intel system outperforms the AMD-setup by 22%.

All in all, Dempsey does reasonably well, but it has to be taken into consideration that this is a test between the quickest Dempsey and a second-tier Opteron, and that AMD could have fared somewhat better with 4GB of extra memory. Intel's new chipset puts an end to the embarrassing deficit that was apparent at the start of this year, but the combination with the Netburst Xeon does not yet make for a persuasive lead.

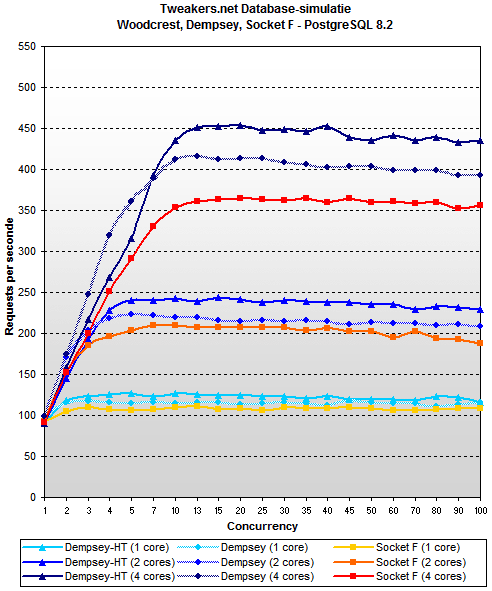

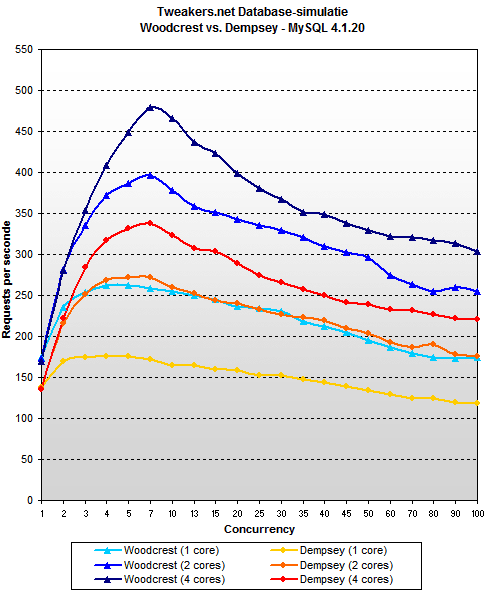

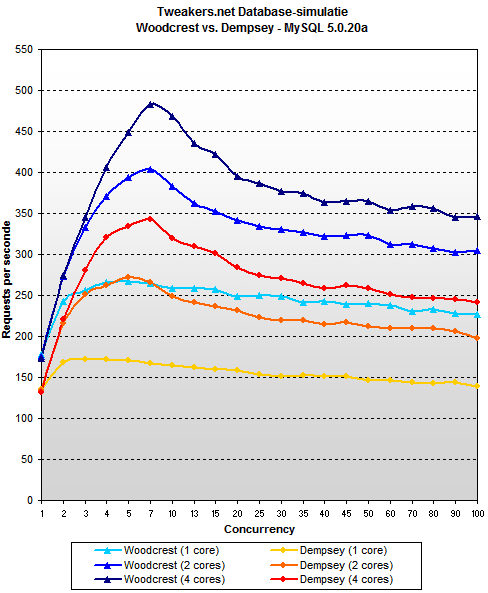

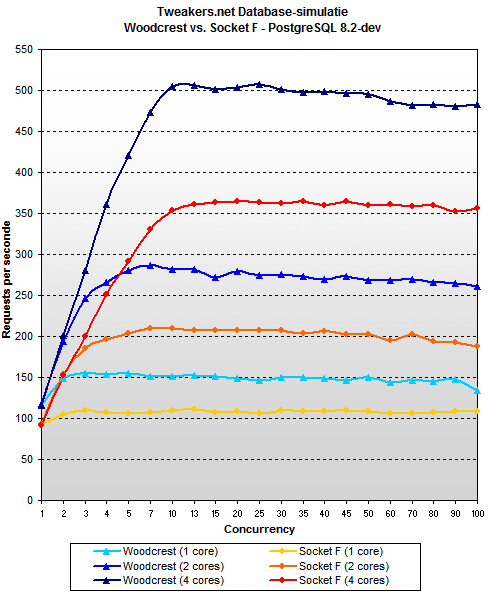

Woodcrest vs. Dempsey

With Blackford having established a solid basis in conjunction with Dempsey, it is time to take a look at the extras that Woodcrest can put into the equation. That turns out to be more than small potatoes: under heavy load it performs some 39% better in MySQL 4.1.20 and 42% better in MySQL 5.0.20a than the Dempsey. The battle is hardly fair anymore: two Woodcrest cores at 2.66GHz beat four Dempsey cores at 3.73GHz with one hand behind their backs. However, the scaling properties of the new architecture are a little disappointing, since the step from two to four cores raises the performance by only 15%. Although MySQL generally fails to scale up well, the Woodcrest is still, relatively speaking, on the slow side of the spectrum. This can possibly be remedied by using faster memory: with the Woodcrests we used the same 533MHz modules as with the Dempsey, while modules clocked at 667MHz would offer 25% more bandwidth and, perhaps more importantly, run in sync with the 1333MHz bus.

In PostgreSQL Dempsey was already getting good results, and Woodcrest does not surpass that by a great amount. Still, the result is not bad, because in spite of the fact that Woodcrest lacks HyperThreading (which gave Dempsey an 8% advantage), it performs some 11% better on average. Moreover, the new Xeon has some extra headroom: our 2.66GHz version of the Woodcrest is not the top-of-the-line model, while the 3.73GHz Dempsey leads in both the price and the performance class of the Netburst line. Here too, faster memory might up the numbers some more.

Woodcrest vs. Opteron

Summary of results (performance)

This summary of test results is based on the averages of measurements obtained under heavy load (25 to 100 simultaneous users). The reason for omitting the lighter loads is to give each system the opportunity to reach its maximal potential, which makes the differences more pronounced than if the 'startup phase' had also been included. The numbers do not stand for pageviews per second like in the diagrams on the previous pages, but the total of what is achieved during a full ten minute run.

| Average performance MySQL 4.1.20 - concurrency 25+ | Pageviews per ten-minute run |
|---|---|
| Woodcrest | 201337 |
| Opteron (DDR) | 179714 |
| Opteron (DDR2) | 162609 |
| Dempsey | 145327 |
| Dempsey-HT | 135615 |
| UltraSparc T1 | 92125 |

| Average performance MySQL 5.0.20a - concurrency 25+ | Pageviews per ten-minute run |
|---|---|
| Woodcrest | 217675 |
| Opteron (DDR) | 178866 |
| Opteron (DDR2) | 167101 |
| Dempsey | 153747 |
| Dempsey-HT | 121594 |
| UltraSparc T1 | 53997 |

| Average performance PostgreSQL 8.2-dev - concurrency 25+ | Pageviews per ten-minute run |
|---|---|
| Woodcrest | 295083 |
| Dempsey-HT | 264699 |
| Dempsey | 241023 |
| Opteron (DDR) | 219639 |
| Opteron (DDR2) | 216108 |
| UltraSparc T1 | 177907 |

The tables below give the relative performance of Woodcrest, Dempsey, and Socket F. They are based on the same measurements as the tables above and express the performance of one processor as a ratio to that of each of the others. For example, the number 1.39 in the first table indicates that the Woodcrest offers 1.39 times the performance of Dempsey in MySQL 4.1.20, in other words, that it is 39% faster.

| Woodcrest | MySQL 4.1.20 | MySQL 5.0.20a | PostgreSQL 8.2-dev | Average |
|---|---|---|---|---|
| Dempsey | 1.39 | 1.42 | 1.11 | 1.31 |
| Opteron (DDR) | 1.12 | 1.22 | 1.34 | 1.23 |
| Opteron (DDR2) | 1.24 | 1.30 | 1.37 | 1.30 |
| UltraSparc T1 | 2.19 | 4.03 | 1.66 | 2.63 |

| Dempsey | MySQL 4.1.20 | MySQL 5.0.20a | PostgreSQL 8.2-dev | Average |
|---|---|---|---|---|
| Woodcrest | 0.72 | 0.71 | 0.90 | 0.78 |
| Opteron (DDR) | 0.81 | 0.86 | 1.21 | 0.96 |
| Opteron (DDR2) | 0.89 | 0.92 | 1.22 | 1.01 |
| UltraSparc T1 | 1.58 | 2.85 | 1.49 | 1.97 |

| Socket F | MySQL 4.1.20 | MySQL 5.0.20a | PostgreSQL 8.2-dev | Average |
|---|---|---|---|---|
| Woodcrest | 0.81 | 0.77 | 0.73 | 0.77 |
| Dempsey | 1.12 | 1.09 | 0.82 | 1.01 |
| Opteron (DDR) | 0.90 | 0.93 | 0.98 | 0.94 |
| UltraSparc T1 | 1.77 | 3.09 | 1.21 | 2.02 |
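For readers who want to check the ratios themselves, the short Python sketch below recomputes them from the heavy-load averages given earlier on this page. The dictionary layout and the `relative_performance` function are our own illustrative names. For Dempsey we take the best setting per database (HyperThreading off for MySQL, on for PostgreSQL), as described above.

```python
# Average pageviews per ten-minute run at concurrency 25+, copied from the
# performance summary tables on this page.
AVERAGES = {
    "Woodcrest": {"MySQL 4.1.20": 201337, "MySQL 5.0.20a": 217675, "PostgreSQL 8.2-dev": 295083},
    # Dempsey: HyperThreading off for MySQL, on for PostgreSQL.
    "Dempsey": {"MySQL 4.1.20": 145327, "MySQL 5.0.20a": 153747, "PostgreSQL 8.2-dev": 264699},
    "Opteron (DDR)": {"MySQL 4.1.20": 179714, "MySQL 5.0.20a": 178866, "PostgreSQL 8.2-dev": 219639},
    "Opteron (DDR2)": {"MySQL 4.1.20": 162609, "MySQL 5.0.20a": 167101, "PostgreSQL 8.2-dev": 216108},
    "UltraSparc T1": {"MySQL 4.1.20": 92125, "MySQL 5.0.20a": 53997, "PostgreSQL 8.2-dev": 177907},
}


def relative_performance(subject: str, baseline: str) -> dict:
    """Return the subject/baseline ratio per database, plus the mean of those ratios."""
    ratios = {
        database: AVERAGES[subject][database] / AVERAGES[baseline][database]
        for database in AVERAGES[subject]
    }
    ratios["Average"] = sum(ratios.values()) / len(ratios)
    return ratios


for database, ratio in relative_performance("Woodcrest", "Dempsey").items():
    print(f"{database}: {ratio:.2f}")
```

Note that the 'Average' column is taken here as the mean of the three ratios, which is an assumption about how the tables were compiled, although it reproduces the Woodcrest row values.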

As a bonus, we take a look at the average performance per database. PostgreSQL, mostly thanks to its good scaling properties, gets scores that are over 50% higher than MySQL. The peaks of the two databases are closer together than the diagram below suggests, but because MySQL's performance degrades after the highest point while PostgreSQL retains more or less the same level, the difference gets bigger with 25 simultaneous users and more. To work out the averages, only Dempsey's top scores have been taken into consideration, which means no HyperThreading for MySQL 4.1 and 5.0 and HyperThreading for PostgreSQL.

| Average performance per database | Pageviews per ten-minute run |
|---|---|
| PostgreSQL 8.2-dev | 234687 |
| MySQL 4.1.20 | 156222 |
| MySQL 5.0.20a | 154277 |

Summary of results (scaling properties)

In addition to absolute performance rates, it is interesting to examine the scaling properties of the different processors, i.e. the gains that can be made by adding extra cores. Again, we only consider the heavy load situations, since that is where the extra computing power matters most. There is only one way to measure the performance with one or four cores, but for two cores there are two possibilities: either two processors each use one core, or one (dualcore) processor is used. In spite of the seemingly substantial influence this might have on memory access (Opteron) and the amount of cache per core (Woodcrest), the difference turns out to be small. Since a choice needs to be made, we have selected the best result of the two, like we did on the previous pages.

| Scaling behaviour Woodcrest | Performance gain |
|---|---|
| MySQL 4.1.20 (from 1 to 2 cores) | 47% |
| MySQL 4.1.20 (from 2 to 4 cores) | 15% |
| MySQL 5.0.20a (from 1 to 2 cores) | 34% |
| MySQL 5.0.20a (from 2 to 4 cores) | 14% |
| PostgreSQL 8.2-dev (from 1 to 2 cores) | 84% |
| PostgreSQL 8.2-dev (from 2 to 4 cores) | 82% |

| Scaling behaviour Dempsey* | Performance gain |
|---|---|
| MySQL 4.1.20 (from 1 to 2 cores) | 42% |
| MySQL 4.1.20 (from 2 to 4 cores) | 19% |
| MySQL 5.0.20a (from 1 to 2 cores) | 44% |
| MySQL 5.0.20a (from 2 to 4 cores) | 21% |
| PostgreSQL 8.2-dev (from 1 to 2 cores) | 95% |
| PostgreSQL 8.2-dev (from 2 to 4 cores) | 88% |

| Scaling behaviour Socket F | Performance gain |
|---|---|
| MySQL 4.1.20 (from 1 to 2 cores) | 48% |
| MySQL 4.1.20 (from 2 to 4 cores) | 25% |
| MySQL 5.0.20a (from 1 to 2 cores) | 44% |
| MySQL 5.0.20a (from 2 to 4 cores) | 21% |
| PostgreSQL 8.2-dev (from 1 to 2 cores) | 85% |
| PostgreSQL 8.2-dev (from 2 to 4 cores) | 80% |

| Scaling behaviour Socket 940 | Performance gain |
|---|---|
| MySQL 4.1.20 (from 1 to 2 cores) | 50% |
| MySQL 4.1.20 (from 2 to 4 cores) | 28% |
| MySQL 5.0.20a (from 1 to 2 cores) | 45% |
| MySQL 5.0.20a (from 2 to 4 cores) | 23% |
| PostgreSQL 8.2-dev (from 1 to 2 cores) | 90% |
| PostgreSQL 8.2-dev (from 2 to 4 cores) | 81% |

The differences are not huge, but they are there. Especially in MySQL, Woodcrest appears weak compared to the Opterons. The performance improvement that is obtained by doubling the number of cores diminishes quickly in all CPUs, but where AMD manages to gain 21% to 28% in the step from two to four cores, the Xeon cannot achieve more than 15% better performance. Of course Intel has the advantage of a strong core, but in the future it could see problems with quadcores and systems with four or more sockets.

| Average per processor* | Performance gain |
|---|---|
| Woodcrest (from 1 to 2 cores) | 55% |
| Woodcrest (from 2 to 4 cores) | 37% |
| Dempsey (from 1 to 2 cores) | 60% |
| Dempsey (from 2 to 4 cores) | 43% |
| Opteron (DDR) (from 1 to 2 cores) | 62% |
| Opteron (DDR) (from 2 to 4 cores) | 44% |
| Opteron (DDR2) (from 1 to 2 cores) | 62% |
| Opteron (DDR2) (from 2 to 4 cores) | 43% |

| Average per database* | Performance gain |
|---|---|
| MySQL 4.1.20 (from 1 to 2 cores) | 48% |
| MySQL 4.1.20 (from 2 to 4 cores) | 22% |
| MySQL 5.0.20a (from 1 to 2 cores) | 43% |
| MySQL 5.0.20a (from 2 to 4 cores) | 21% |
| PostgreSQL 8.2-dev (from 1 to 2 cores) | 89% |
| PostgreSQL 8.2-dev (from 2 to 4 cores) | 83% |

*) In all Dempsey tests, HyperThreading was switched off in MySQL, but for PostgreSQL it was switched on
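To make explicit what a scaling percentage means, here is a minimal Python sketch. The `scaling_gain` and `average_gain` helpers are illustrative names of our own, and the pageview numbers in the usage line are made up purely to show the arithmetic. The averaging step uses the rounded percentages from the Woodcrest and Dempsey tables above, so a figure computed from unrounded measurements can differ by a point.

```python
from statistics import mean

# Scaling gains in percent per database, in the order MySQL 4.1.20,
# MySQL 5.0.20a, PostgreSQL 8.2-dev, copied from the tables above.
SCALING = {
    "Woodcrest": {"1 to 2": [47, 34, 84], "2 to 4": [15, 14, 82]},
    "Dempsey": {"1 to 2": [42, 44, 95], "2 to 4": [19, 21, 88]},
}


def scaling_gain(pageviews_before: float, pageviews_after: float) -> float:
    """Percentage gain obtained by doubling the number of cores."""
    return (pageviews_after / pageviews_before - 1.0) * 100.0


def average_gain(processor: str, step: str) -> int:
    """Mean of the per-database gains for one processor, rounded to whole percent."""
    return round(mean(SCALING[processor][step]))


# Hypothetical example: 100000 pageviews on one core, 147000 on two cores.
print(f"{scaling_gain(100000, 147000):.0f}%")
print(average_gain("Woodcrest", "1 to 2"), average_gain("Woodcrest", "2 to 4"))
```

A gain of 100% would mean perfect scaling, so PostgreSQL's figures in the eighties and nineties are close to ideal, while MySQL's step from two to four cores is far from it.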

Power, prices, and conclusion

So far, we have concentrated on processor performance, but these days, one hears the expression 'performance per watt' quite a lot. We have examined four complete server systems during the running of the benchmarks to see how much power they consumed under load. We are not talking about absolute maximums here, but rather the consumption under a realistic sort of pressure. Since we only had a separate motherboard available for the Socket F Opteron (instead of a complete server as was the case for the other processors), we have not taken it into consideration here. Instead, we assume that the Socket 940 version should give a fair picture of how AMD servers generally perform in the energy realm. Presumably, the Socket F version will need less power due to the use of DDR2 memory, but that advantage will partially evaporate due to the fact that its performance is slightly less. The performances per Watt have been obtained by looking at the average number of pageviews per ten minutes under a load of 25 to 100 users, and dividing this by the amount of Watts measured.

| Power consumption under load | Watts |
|---|---|
| Primergy RX300 S3 (Dempsey) | 447 |
| Fire X4200 (Opteron (DDR)) | 341 |
| Primergy RX300 S3 (Woodcrest) | 294 |
| Fire T2000 (UltraSparc T1) | 232 |

|

| Performance/Watt | MySQL 4.1.20 | MySQL 5.0.20a | PostgreSQL 8.2-dev |
|---|---|---|---|
| Woodcrest | 685 | 740 | 1004 |
| Opteron (DDR) | 527 | 524 | 644 |
| Dempsey | 325 | 344 | 592 |
| UltraSparc T1 | 397 | 233 | 766 |

| Average performance | Pageviews per ten-minute run |
|---|---|
| Woodcrest | 238032 |
| Opteron (DDR) | 192740 |
| Dempsey | 187924 |
| Opteron (DDR2) | 181939 |
| UltraSparc T1 | 108010 |

| Average performance per Watt | Pageviews per ten-minute run per Watt |
|---|---|
| Woodcrest | 810 |
| Opteron (DDR) | 565 |
| UltraSparc T1 | 465 |
| Dempsey | 420 |
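The per-Watt figures follow directly from dividing the average number of pageviews by the measured power consumption. The Python sketch below repeats that calculation with the numbers from the tables on this page. The function names are our own, and small deviations from the published values can occur because we start from rounded averages here.

```python
# Power consumption under load in Watts, from the table on this page.
POWER_WATTS = {
    "Woodcrest": 294,
    "Opteron (DDR)": 341,
    "Dempsey": 447,
    "UltraSparc T1": 232,
}

# Average pageviews per ten-minute run over all three databases.
AVERAGE_PAGEVIEWS = {
    "Woodcrest": 238032,
    "Opteron (DDR)": 192740,
    "Dempsey": 187924,
    "UltraSparc T1": 108010,
}


def performance_per_watt(system: str) -> float:
    """Average pageviews per ten-minute run divided by the power draw in Watts."""
    return AVERAGE_PAGEVIEWS[system] / POWER_WATTS[system]


def efficiency_lead(subject: str, baseline: str) -> float:
    """How much more performance per Watt the subject offers, in percent."""
    return (performance_per_watt(subject) / performance_per_watt(baseline) - 1.0) * 100.0


for system in POWER_WATTS:
    print(f"{system}: {performance_per_watt(system):.0f} pageviews per Watt")
print(f"Woodcrest vs. Dempsey: {efficiency_lead('Woodcrest', 'Dempsey'):.1f}% more per Watt")
```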

On average, the new Xeon offers the best performance per Watt: almost 93% more than the Netburst-based Dempsey, 43% more than the AMD Opteron, and 74% more than the Sun UltraSparc T1. Although these figures are not representative of all the various servers and applications, we can conclude that Woodcrest is quite an efficient chip, which at the system level seems to be hardly bothered by its external memory controller and warmer FB-DIMM modules. The average performance gains of 23% to 31% over the competition further add to the good impression that the CPU makes. Another significant factor is the price of the processors. Even though this is only a small part of the total cost of a server, the pricelist nevertheless gives a good indication of how the various processors are positioned:

| Socket F Opteron | Price | Woodcrest | Price | Dempsey | Price |
|---|---|---|---|---|---|
| | | | | 3.0GHz (95W) | $177 |
| | | 1.66GHz (65W) | $209 | | |
| 1.8GHz (95W) | $255 | 1.86GHz (65W) | $256 | | |
| 1.8GHz (68W) | $316 | 2.0GHz (65W) | $316 | 3.2GHz (130W) | $316 |
| 2.0GHz (95W) | $377 | | | 3.2GHz (95W) | $369 |
| 2.0GHz (68W) | $450 | 2.33GHz (65W) | $455 | | |
| 2.2GHz (95W) | $523 | | | | |
| 2.2GHz (68W) | $611 | | | | |
| 2.4GHz (95W) | $698 | 2.66GHz (65W) | $690 | | |
| 2.4GHz (68W) | $768 | | | | |
| 2.6GHz (95W) | $873 | 3.0GHz (80W) | $851 | 3.73GHz (130W) | $851 |
| 2.8GHz (120W) | $1165 | | | | |

Here, too, things do not look too bright for AMD. For every Opteron one can find a similarly or lower priced Woodcrest that is faster and/or more energy efficient. In these tests, we did not consider the top models, but it is not the case that one brand has more headroom than another. For instance, going up a step on the price ladder makes the difference in clock speed 400MHz as opposed to 266MHz. The 'special edition' Opteron at 2.8GHz approaches the top model Woodcrest, but it is 314 dollars more expensive and comes with higher power consumption as an extra bonus.

Besides the processor price, the cost of memory is of course also important. Since FB-DIMM requires an extra component (the buffer chip), it is expected to be more expensive than ordinary DIMM at the same clock speed. In other words, the choice for Woodcrest implies extra 'hidden' costs. However, it is difficult to determine exactly how big the difference is. Our Pricewatch section lists 1GB DDR2-667 from about 100 euros, while the equivalent FB-DIMM is priced at almost 160 euros. That keeps the Opteron interesting for those who want to assemble their own machines and want plenty of memory at little cost. But those who do business with one of the large manufacturers see a different picture. Well-known brands ask exorbitant prices for memory, and whether it is DDR(2) or FB-DIMM does not make an awful lot of difference. At IBM, the only large manufacturer that sells both DDR2-667 and FB-DIMM at the time of publication, the buffered version is even a little cheaper. Dell, too, offers a fair deal on FB-DIMM compared to the DDR2-667 modules from IBM and Sun.

Prices of 1GB memory

| Manufacturer | Registered DIMM | FB-DIMM (667MHz) | Difference |
|---|---|---|---|
| Dell | $190 (DDR2-400) | $228.50 | +20.3% |
| IBM | $255 (DDR2-667) | $249 | -2.4% |
| HP | $254.50 (DDR400) | $274.50 | +7.9% |
| Apple | $315 (DDR2-533) | $350 | +11.1% |
| Sun | $247.50 (DDR2-667) | - | - |
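The 'Difference' column is simply the FB-DIMM price relative to the registered DIMM price of the same manufacturer. As a quick check, the following Python sketch recomputes it from the prices in the table. The `price_difference` helper and the dictionary are illustrative names of our own, and Sun is left out because it lists no FB-DIMM price.

```python
# Prices in dollars for 1GB modules: (registered DIMM, FB-DIMM at 667MHz).
MEMORY_PRICES = {
    "Dell": (190.00, 228.50),
    "IBM": (255.00, 249.00),
    "HP": (254.50, 274.50),
    "Apple": (315.00, 350.00),
}


def price_difference(registered: float, fb_dimm: float) -> float:
    """Extra cost of FB-DIMM over registered DIMM in percent (negative means cheaper)."""
    return (fb_dimm / registered - 1.0) * 100.0


for manufacturer, (registered, fb_dimm) in MEMORY_PRICES.items():
    print(f"{manufacturer}: {price_difference(registered, fb_dimm):+.1f}%")
```

Keep in mind that the registered modules compared here run at different clock speeds per manufacturer, so the percentages are only a rough indication.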

The introduction of Blackford and Woodcrest allows Intel some breathing space for the time being. At last the Opteron has a more than worthy competitor, one that beats it in terms of performance, power efficiency, and price. AMD can consider itself lucky that Intel's new architecture is not suitable for four sockets at the moment (the market segment where AMD's gains have been most significant), since in the other areas Intel's main competitor appears to have been taken completely by surprise. The fact that there is no immediate answer from AMD does not mean that the battle is over. Both companies are working hard on the development of quadcores. Intel aims to release its 'Clovertown' within a few months and AMD is due to release a four core CPU mid-2007.

AMD is also working on an improved K8 version under the codename K8L. As far as is currently known, this will not introduce as many radical new features as the Core architecture, but it does share certain important characteristics with Core, such as 128-bit wide multimedia units. However, according to the latest gossip, the K8L design will not be entering the field until the beginning of 2008. That would mean that the first quadcore server chips will be based on the current K8 design.

We hope to find out soon if Core is strong enough to stay ahead over the next few years. Woodcrest appears to be rock-solid, but the disappointing scaling characteristics may cause Intel problems switching to quadcores and four socket systems. Even if that goes smoothly AMD might pull a rabbit out of its hat in the form of K8L. So, the future is hardly carved in stone, but for now the choice for us was easy: in part on the basis of these benchmarks we have ordered a dual 3GHz Woodcrest server for our forum database.

Acknowledgements

Tweakers.net wishes to thank Fujitsu-Siemens (Woodcrest and Dempsey), MSI (Socket F motherboard), Adata (DDR2 memory), Sun (Socket 940 Opteron and UltraSparc T1), AMD, and Intel for their contributions to this article. Also, we want to thank Mick de Neeve for the English translation and once again ACM and moto-moi for executing the benchmarks and giving valuable information which aided the interpretation and the description of the results.

Previous articles in this series

30-7-2006: AMD Socket F

27-7-2006: Sun UltraSparc T1 vs. AMD Opteron (Dutch)

19-4-2006: Xeon vs. Opteron, single- and dualcore (Dutch)
